Regression

Regression models describe the relationship between a response (output) variable and one or more predictor (input) variables. Statistics and Machine Learning Toolbox™ lets you fit linear, generalized linear, and nonlinear regression models, including stepwise models and mixed-effects models. After you fit a model, you can use it to predict or simulate responses, assess the model fit using hypothesis tests, or use plots to visualize diagnostics, residuals, and interaction effects.
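As a minimal sketch of the fit, predict, and diagnose workflow described above, the following example fits a linear model with `fitlm`. It assumes the `carsmall` sample data set is available; the choice of predictors and the new observation values are illustrative only.

```matlab
% Load a sample data set (assumed to be on the path with the toolbox).
load carsmall

% Collect two predictors and the response in a table.
tbl = table(Weight, Horsepower, MPG);

% Fit a linear regression model specified in Wilkinson notation.
mdl = fitlm(tbl, 'MPG ~ Weight + Horsepower');

% Display coefficient estimates, p-values, and fit statistics.
disp(mdl)

% Predict the response for a new observation (illustrative values).
newData = table(3000, 130, 'VariableNames', {'Weight', 'Horsepower'});
mpgPred = predict(mdl, newData);

% Plot a histogram of the residuals as a basic diagnostic.
plotResiduals(mdl)
```

The same pattern (fit, inspect, predict, plot) carries over to the other model types, each with its own fitting function.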

Statistics and Machine Learning Toolbox also provides nonparametric regression methods, which accommodate more complex regression curves without requiring you to specify a predetermined regression function that relates the response to the predictors. You can predict responses for new data using the trained model. Gaussian process regression models also enable you to compute prediction intervals.
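The nonparametric case can be sketched with a Gaussian process regression model trained by `fitrgp`. This example again assumes the `carsmall` sample data set; the predictors, the `'Standardize'` setting, and the query point are illustrative choices, not recommendations.

```matlab
% Load a sample data set (assumed to be on the path with the toolbox).
load carsmall

% Build the predictor matrix and response vector, keeping complete rows only.
X = [Weight, Horsepower];
y = MPG;
complete = all(~isnan([X, y]), 2);
X = X(complete, :);
y = y(complete);

% Train a Gaussian process regression model on standardized predictors.
gprMdl = fitrgp(X, y, 'Standardize', true);

% Predict at a new point (illustrative values). The third output holds
% the prediction interval bounds (95% by default).
[ypred, ysd, yint] = predict(gprMdl, [3000, 130]);
```

Here `ysd` is the predicted standard deviation and `yint` contains the lower and upper bounds of the prediction interval for each query row.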

Regression Basics

Categories

- Regression Learner App

Interactively train, validate, and tune regression models

- Linear Regression

Multiple, stepwise, multivariate regression models, and more

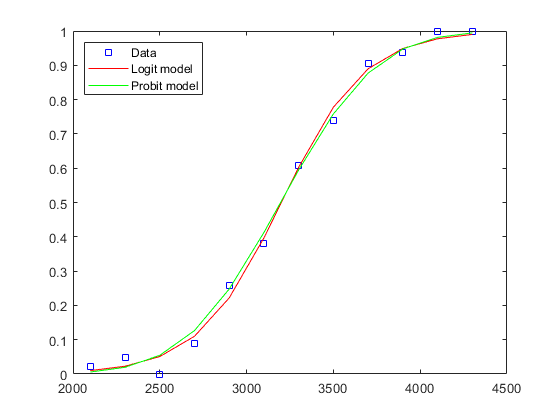

- Generalized Linear Models

Logistic regression, multinomial regression, Poisson regression, and more

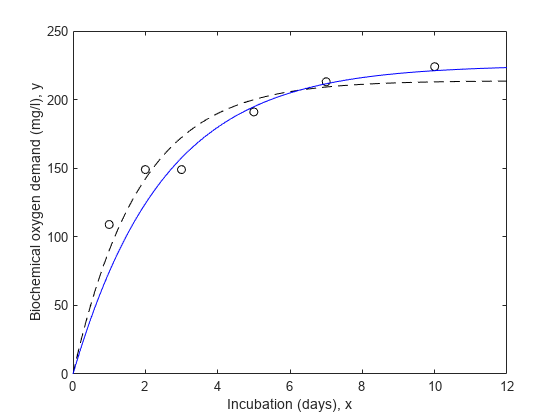

- Nonlinear Regression

Nonlinear fixed- and mixed-effects regression models

- Support Vector Machine Regression

Support vector machines for regression models

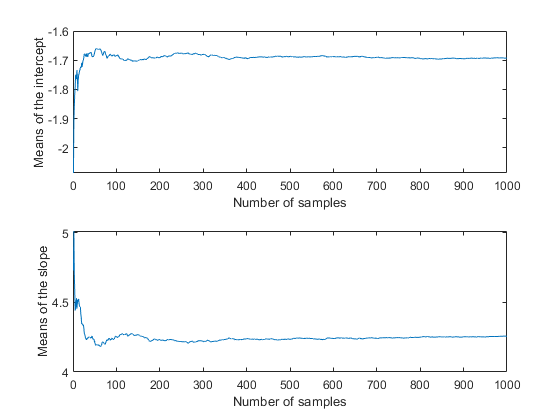

- Gaussian Process Regression

Gaussian process regression models (kriging)

- Regression Trees

Binary decision trees for regression

- Regression Tree Ensembles

Random forests, boosted and bagged regression trees

- Generalized Additive Model

Interpretable model composed of univariate and bivariate shape functions for regression

- Neural Networks

Neural networks for regression

- Incremental Learning

Fit a linear regression model to streaming data and track its performance

- Direct Forecasting

Perform direct forecasting using regularly sampled time series data

- Interpretability

Train interpretable regression models and interpret complex regression models

- Model Building and Assessment

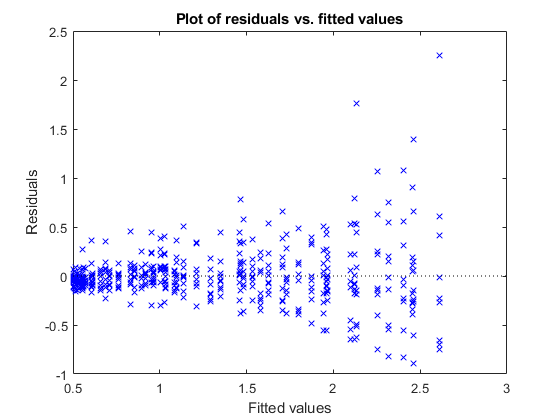

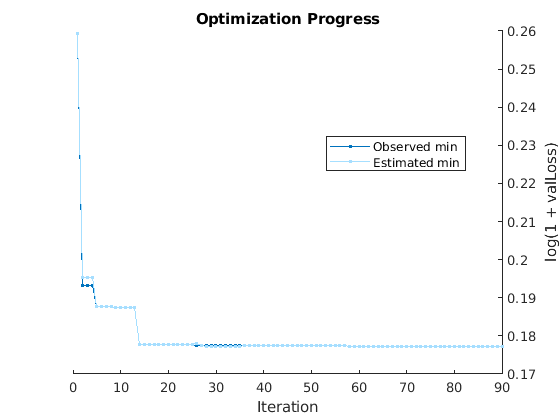

Feature selection, feature engineering, model selection, hyperparameter optimization, cross-validation, residual diagnostics, and plots