Solve a Constrained Nonlinear Problem, Problem-Based

Typical Optimization Problem

This example shows how to solve a constrained nonlinear optimization problem using the problem-based approach. The example demonstrates the typical work flow: create an objective function, create constraints, solve the problem, and examine the results.

Note:

If your objective function or nonlinear constraints are not composed of elementary functions, you must convert the nonlinear functions to optimization expressions using fcn2optimexpr. See the last part of this example, Alternative Formulation Using fcn2optimexpr, or Convert Nonlinear Function to Optimization Expression.

For the solver-based approach to this problem, see Constrained Nonlinear Problem Using Optimize Live Editor Task or Solver.

Problem Formulation: Rosenbrock's Function

Consider the problem of minimizing the logarithm of 1 plus Rosenbrock's function

over the unit disk, meaning the disk of radius 1 centered at the origin. In other words, find that minimizes the function over the set . This problem is a minimization of a nonlinear function subject to a nonlinear constraint.

Rosenbrock's function is a standard test function in optimization. It has a unique minimum value of 0 attained at the point [1,1], and therefore attains the same minimum at the same point. Finding the minimum is a challenge for some algorithms because the function has a shallow minimum inside a deeply curved valley. The solution for this problem is not at the point [1,1] because that point does not satisfy the constraint.

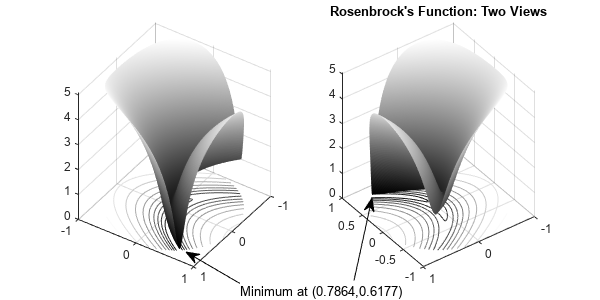

This figure shows two views of the function function in the unit disk. Contour lines lie beneath the surface plot.

rosenbrock = @(x)log(1 + 100*(x(:,2) - x(:,1).^2).^2 + (1 - x(:,1)).^2); % Vectorized function figure1 = figure('Position',[1 200 600 300]); colormap('gray'); axis square; R = 0:.002:1; TH = 2*pi*(0:.002:1); X = R'*cos(TH); Y = R'*sin(TH); Z = rosenbrock([X(:),Y(:)]); % Z = f(x) Z = reshape(Z,size(X)); % Create subplot subplot1 = subplot(1,2,1,'Parent',figure1); view([124 34]); grid('on'); hold on; % Create surface surf(X,Y,Z,'Parent',subplot1,'LineStyle','none'); % Create contour contour(X,Y,Z,'Parent',subplot1); % Create subplot subplot2 = subplot(1,2,2,'Parent',figure1); view([234 34]); grid('on'); hold on % Create surface surf(X,Y,Z,'Parent',subplot2,'LineStyle','none'); % Create contour contour(X,Y,Z,'Parent',subplot2); % Create textarrow annotation(figure1,'textarrow',[0.4 0.31],... [0.055 0.16],... 'String',{'Minimum at (0.7864,0.6177)'}); % Create arrow annotation(figure1,'arrow',[0.59 0.62],... [0.065 0.34]); title("Rosenbrock's Function: Two Views") hold off

The rosenbrock function handle calculates the function at any number of 2-D points at once. This Vectorization speeds the plotting of the function, and can be useful in other contexts for speeding evaluation of a function at multiple points.

The function is called the objective function. The objective function is the function you want to minimize. The inequality is called a constraint. Constraints limit the set of over which a solver searches for a minimum. You can have any number of constraints, which are inequalities or equations.

Define Problem Using Optimization Variables

The problem-based approach to optimization uses optimization variables to define objective and constraints. There are two approaches for creating expressions using these variables:

For elementary functions such as polynomials or trigonometric functions, write expressions directly in the variables.

For other types of functions, convert functions to optimization expressions using

fcn2optimexpr. See Alternative Formulation Usingfcn2optimexprat the end of this example.

For this problem, both the objective function and the nonlinear constraint are elementary, so you can write the expressions directly in terms of optimization variables. Create a 2-D optimization variable named 'x'.

x = optimvar('x',1,2);Create the objective function as an expression of the optimization variable.

obj = log(1 + 100*(x(2) - x(1)^2)^2 + (1 - x(1))^2);

Create an optimization problem named prob having obj as the objective function.

prob = optimproblem('Objective',obj);Create the nonlinear constraint as a polynomial of the optimization variable.

nlcons = x(1)^2 + x(2)^2 <= 1;

Include the nonlinear constraint in the problem.

prob.Constraints.circlecons = nlcons;

Review the problem.

show(prob)

OptimizationProblem :

Solve for:

x

minimize :

log(((1 + (100 .* (x(2) - x(1).^2).^2)) + (1 - x(1)).^2))

subject to circlecons:

(x(1).^2 + x(2).^2) <= 1

Solve Problem

To solve the optimization problem, call solve. The problem needs an initial point, which is a structure giving the initial value of the optimization variable. Create the initial point structure x0 having an -value of [0 0].

x0.x = [0 0]; [sol,fval,exitflag,output] = solve(prob,x0)

Solving problem using fmincon. Local minimum found that satisfies the constraints. Optimization completed because the objective function is non-decreasing in feasible directions, to within the value of the optimality tolerance, and constraints are satisfied to within the value of the constraint tolerance.

sol = struct with fields:

x: [0.7864 0.6177]

fval = 0.0447

exitflag =

OptimalSolution

output = struct with fields:

iterations: 23

funcCount: 44

constrviolation: 0

stepsize: 7.7862e-09

algorithm: 'interior-point'

firstorderopt: 8.0000e-08

cgiterations: 8

message: 'Local minimum found that satisfies the constraints....'

bestfeasible: [1x1 struct]

objectivederivative: "reverse-AD"

constraintderivative: "closed-form"

solver: 'fmincon'

Examine Solution

The solution shows exitflag = OptimalSolution. This exit flag indicates that the solution is a local optimum. For information on trying to find a better solution, see When the Solver Succeeds.

The exit message indicates that the solution satisfies the constraints. You can check that the solution is indeed feasible in several ways.

Check the reported infeasibility in the

constrviolationfield of theoutputstructure.

infeas = output.constrviolation

infeas = 0

An infeasibility of 0 indicates that the solution is feasible.

Compute the infeasibility at the solution.

infeas = infeasibility(nlcons,sol)

infeas = 0

Again, an infeasibility of 0 indicates that the solution is feasible.

Compute the norm of

xto ensure that it is less than or equal to 1.

nx = norm(sol.x)

nx = 1.0000

The output structure gives more information on the solution process, such as the number of iterations (23), the solver (fmincon), and the number of function evaluations (44). For more information on these statistics, see Tolerances and Stopping Criteria.

Alternative Formulation Using fcn2optimexpr

For more complex expressions, write function files for the objective or constraint functions, and convert them to optimization expressions using fcn2optimexpr. For example, the basis of the nonlinear constraint function is in the disk.m file:

type diskfunction radsqr = disk(x) radsqr = x(1)^2 + x(2)^2;

Convert this function file to an optimization expression.

radsqexpr = fcn2optimexpr(@disk,x);

Furthermore, you can also convert the rosenbrock function handle, which was defined at the beginning of the plotting routine, into an optimization expression.

rosenexpr = fcn2optimexpr(rosenbrock,x);

Create an optimization problem using these converted optimization expressions.

convprob = optimproblem('Objective',rosenexpr,'Constraints',radsqexpr <= 1);

View the new problem.

show(convprob)

OptimizationProblem :

Solve for:

x

minimize :

log(((1 + (100 .* (x(2) - x(1).^2).^2)) + (1 - x(1)).^2))

subject to :

(x(1).^2 + x(2).^2) <= 1

Solve the new problem. The solution is essentially the same as before.

[sol,fval,exitflag,output] = solve(convprob,x0)

Solving problem using fmincon. Local minimum found that satisfies the constraints. Optimization completed because the objective function is non-decreasing in feasible directions, to within the value of the optimality tolerance, and constraints are satisfied to within the value of the constraint tolerance.

sol = struct with fields:

x: [0.7864 0.6177]

fval = 0.0447

exitflag =

OptimalSolution

output = struct with fields:

iterations: 23

funcCount: 44

constrviolation: 0

stepsize: 7.7862e-09

algorithm: 'interior-point'

firstorderopt: 8.0000e-08

cgiterations: 8

message: 'Local minimum found that satisfies the constraints....'

bestfeasible: [1x1 struct]

objectivederivative: "reverse-AD"

constraintderivative: "closed-form"

solver: 'fmincon'

For the list of supported functions, see Supported Operations for Optimization Variables and Expressions.