Identify unexpected events and departures from normal behavior using anomaly detection

Anomaly detection is the process of identifying events or patterns that differ from expected behavior. Anomaly detection can range from simple outlier detection to complex machine learning algorithms trained to uncover hidden patterns across hundreds of signals.

Engineers and data scientists use anomaly detection to identify:

- Faults in machinery for predictive maintenance

- Defects in manufacturing production lines

- Cancer in radiology images

- Fraud in financial transactions

- Customer churn in retail

- Unusual movements in video surveillance footage

There are many ways to design anomaly detection algorithms in MATLAB®. The most suitable approach for a given application depends on how much anomalous data is available and whether you can distinguish anomalies from normal data.

The first step in anomaly detection is to examine the data you have. Consider the following questions:

Can you see anomalies in the raw data?

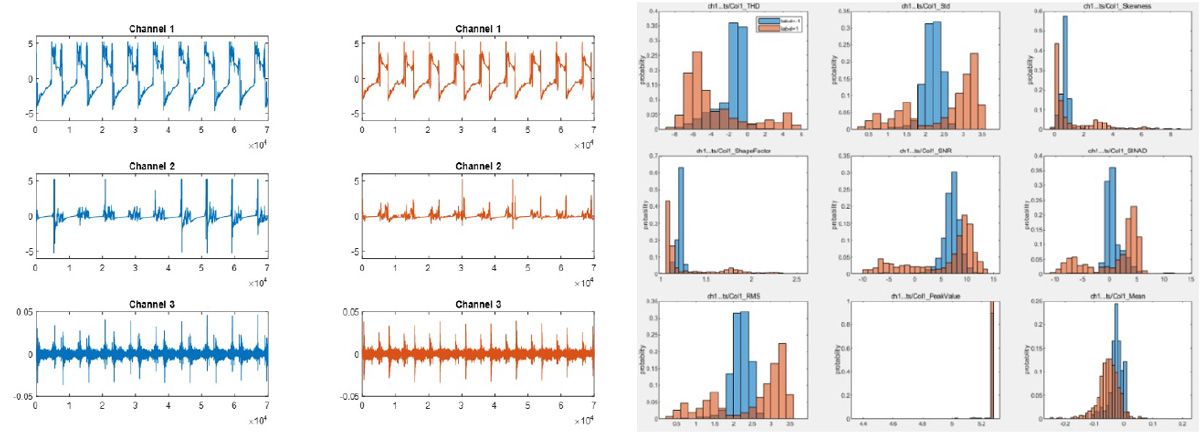

Sometimes you can perform anomaly detection just by looking at your data. For example, the signals in Figure 1 below were collected from a fan, and you can easily see the abrupt signal changes that indicate anomalies in the fan behavior. If you are able to detect anomalies by eye, you may be able to use a simple algorithm such as findchangepts or controlchart for anomaly detection.

Figure 1: A MATLAB plot of data from a cooling fan, showing anomalies that are easy to spot.
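As a minimal sketch of the simple case described above, the following uses findchangepts on a synthetic signal whose noise level jumps partway through. The signal is a made-up stand-in for the fan data, and the choice of the RMS statistic and a single change point are assumptions for illustration.

```matlab
% Synthetic stand-in for a fan signal: the RMS level jumps at sample 501.
rng(0);                                    % reproducible noise
x = [0.5*randn(500,1); 2.0*randn(500,1)];

% Locate the single most significant change in RMS level
% (findchangepts requires Signal Processing Toolbox).
idx = findchangepts(x, 'Statistic', 'rms', 'MaxNumChanges', 1);

fprintf('Change detected near sample %d\n', idx);
```

Calling findchangepts without output arguments plots the signal with the detected change points, which can help you check the result by eye.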

Can you see anomalies in derived features?

Anomalies are often difficult to detect visually from raw data. In the signals in Figure 2, it is difficult to determine which of the time domain signals is anomalous. However, if you create a power spectrum to view the data in the frequency domain, differences in the frequency and magnitude of the peaks clearly show that the two signals are quite different. In such cases, you can use these peaks as feature inputs to an anomaly detection algorithm based on supervised learning methods.

Figure 2: Although anomalies are not visually apparent in the raw time series signals (left), viewing the data in the frequency domain (right, using a periodogram in MATLAB) shows clear differences in the peak frequencies.
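The frequency-domain view can be sketched as follows, assuming two synthetic noisy tones in place of the measured signals. The sample rate, tone frequencies, and variable names are illustrative assumptions, not values from the figure.

```matlab
fs = 1000;                      % assumed sample rate in Hz
t  = (0:fs-1)'/fs;              % one second of samples
rng(0);
xNormal  = sin(2*pi*50*t)  + 0.5*randn(size(t));   % dominant tone at 50 Hz
xAnomaly = sin(2*pi*120*t) + 0.5*randn(size(t));   % dominant tone at 120 Hz

% One-sided power spectral density estimates (Signal Processing Toolbox).
[pNormal,  f] = periodogram(xNormal,  [], [], fs);
[pAnomaly, ~] = periodogram(xAnomaly, [], [], fs);

% Use the frequency of the largest spectral peak as a feature.
[~, iN] = max(pNormal);
[~, iA] = max(pAnomaly);
peakFreqNormal  = f(iN);
peakFreqAnomaly = f(iA);
```

The two time-domain signals look similar under noise, but their peak frequencies differ clearly, so the peak location and height are natural candidates for features.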

Can you statistically separate normal and anomalous features?

Anomalies are not always apparent in a single signal. Today’s complex machines can have hundreds of sensors, and sometimes anomalies become apparent only when considering multiple sensors at once. When you have labeled data, you can examine statistical distributions of time- and frequency-domain features, as shown in Figure 3. You may also perform feature transformation and ranking to identify the features that best separate the two groups. Then, you can use these features to train an anomaly detection algorithm on the labeled data using supervised learning.

Figure 3: On the left, the MATLAB plots represent paired normal and anomalous data, in blue and red, respectively. On the right, corresponding feature histograms from the Diagnostic Feature Designer are used to identify which features clearly separate normal and anomalous data for supervised anomaly detection algorithms.
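To illustrate the idea of ranking features by how well they separate the two groups, here is a minimal sketch that uses a simple separation score (difference in class means divided by the pooled standard deviation). This is a hand-rolled stand-in, not the ranking method used by the Diagnostic Feature Designer, and the feature values are synthetic.

```matlab
rng(0);
n = 200;   % observations per class
% Hypothetical feature matrices; columns are RMS, kurtosis, peak frequency.
Xnormal  = [1.0 + 0.1*randn(n,1), 3.0 + 0.5*randn(n,1), 50 + 5*randn(n,1)];
Xanomaly = [1.6 + 0.1*randn(n,1), 3.1 + 0.5*randn(n,1), 52 + 5*randn(n,1)];

% Separation score per feature: difference in means over pooled standard deviation.
pooledStd = sqrt((var(Xnormal) + var(Xanomaly)) / 2);
score = abs(mean(Xanomaly) - mean(Xnormal)) ./ pooledStd;

% Rank features from most to least separating.
[~, ranking] = sort(score, 'descend');
```

In this synthetic data the first feature separates the classes far better than the other two, so it would be the first candidate for training a supervised model.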

What if you don’t know what an anomaly looks like?

Machinery downtime is expensive, so operators often aim to prevent problems with a conservative maintenance schedule. This can mean anomalies are rare, which makes designing an anomaly detection algorithm tricky.

Several approaches to designing anomaly detection algorithms require little or no anomalous data. These “normal-only” methods train an algorithm on normal data only, and identify data outside those norms as anomalous.

With MATLAB, you can apply the following normal-only anomaly detection approaches to your data:

Thresholding. Thresholding identifies an anomaly when data exceeds a threshold on a statistical metric. Examples include computing the standard deviation over recent windows in time series data, using a control chart on a signal, finding abrupt changes in a signal using change point detection, or obtaining robust estimates of the data distribution and identifying samples on the fringes of the distribution as anomalies. Thresholding on statistical metrics can be a good start, but this approach is more difficult to apply to multivariate data and is less robust than machine learning approaches to anomaly detection. Statistical estimates that are robust to outliers, such as robust covariance, will yield better results.
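A minimal sketch of thresholding on a windowed standard deviation might look like the following. The window length, the three-sigma rule, and the synthetic signals are all assumptions chosen for illustration; the threshold is learned from normal data only.

```matlab
rng(0);
xTrain = randn(2000,1);                                  % normal-only reference data
xTest  = [randn(600,1); 4*randn(200,1); randn(600,1)];   % anomaly in samples 601-800

win = 50;                                % assumed window length in samples
sTrain = movstd(xTrain, win);            % moving standard deviation of normal data

% Threshold: mean plus three standard deviations of the windowed metric.
threshold = mean(sTrain) + 3*std(sTrain);

% Flag test samples whose local standard deviation exceeds the threshold.
sTest = movstd(xTest, win);
isAnomalous = sTest > threshold;
```

Because the metric is computed over a window, samples near the edges of the anomalous segment are flagged gradually rather than at an exact boundary.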

One-Class Support Vector Machines. Support vector machines identify separating hyperplanes that maximize the margin between classes. Training on only one class yields a model of the data that can be considered normal, which allows you to detect anomalies without any labeled anomalies available for training. This approach and other distance-based methods require numeric features as input and tend not to work well on high-dimensional data.
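As a sketch of one-class learning with fitcsvm (Statistics and Machine Learning Toolbox), the example below trains on synthetic normal data with a single class label and scores a few new points. The data, the 5% outlier fraction, and the variable names are illustrative assumptions.

```matlab
rng(0);
Xnormal = randn(500,2);                 % normal-only training data, two features
Xnew    = [0 0; 0.5 -0.5; 6 6; -7 5];   % last two rows lie far from the training cloud

% One-class learning: every training label belongs to the same class.
mdl = fitcsvm(Xnormal, ones(size(Xnormal,1),1), ...
    'KernelScale', 'auto', 'Standardize', true, 'OutlierFraction', 0.05);

% Negative scores mark observations outside the learned boundary.
[~, score] = predict(mdl, Xnew);
isAnomalous = score < 0;
```

The OutlierFraction value sets the share of training observations expected to receive negative scores, so it acts as a sensitivity setting you would tune for your data.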

Isolation Forests. Isolation forests build trees that isolate each observation into a leaf. The anomaly score is computed from the average depth of a sample's leaf across the trees: anomalous samples take fewer decisions to isolate than normal ones. This method supports a mix of numeric and categorical features and works on high-dimensional data.
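The following sketch uses the iforest and isanomaly functions from Statistics and Machine Learning Toolbox (available in recent releases) on synthetic data. The contamination fraction and the test points are assumptions for illustration.

```matlab
rng(0);
Xnormal = randn(500,3);           % normal-only training data, three features
Xnew    = [0 0 0; 8 8 8];         % a typical point and a far-away point

% Train the forest; ContaminationFraction sets the score threshold
% from the training data.
[forest, ~, trainScores] = iforest(Xnormal, 'ContaminationFraction', 0.05);

% Score new observations; higher scores indicate shorter average paths.
[isAnomalous, newScores] = isanomaly(forest, Xnew);
```

Scores lie between 0 and 1, and a point that is easy to isolate, such as the second row of Xnew, receives a higher score than a point in the middle of the training data.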

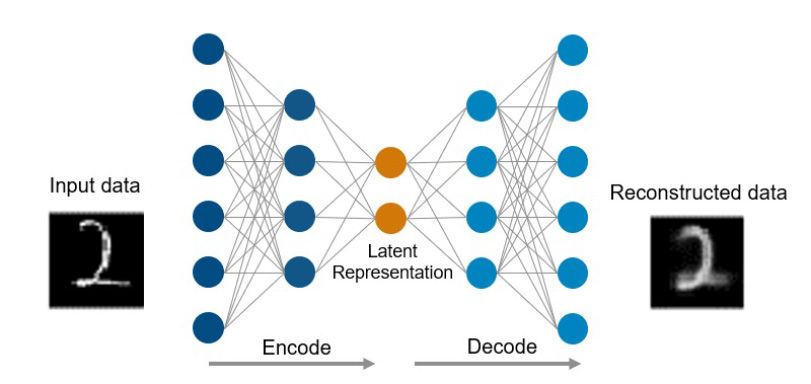

Autoencoders. Autoencoders are neural networks trained on normal data that attempt to reconstruct the original input. The trained autoencoder will reconstruct a normal input accurately. A large difference between the input and its reconstruction could indicate an anomaly. Autoencoders can be used for both signal and image data.

Figure 4: Autoencoders are trained to replicate inputs. The differences between the input and its reconstruction can be used for anomaly detection in signal or image data.
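A minimal sketch of reconstruction-error scoring, assuming trainAutoencoder from Deep Learning Toolbox and a small synthetic data set, is shown below. The hidden size, training options, and 99th-percentile threshold are illustrative assumptions, and results will vary with training.

```matlab
rng(0);
% Normal data: four features driven by one latent variable (columns are samples).
z = randn(1,500);
Xnormal = [z; 2*z; -z; 0.5*z] + 0.05*randn(4,500);

% Train a small autoencoder on normal data only.
autoenc = trainAutoencoder(Xnormal, 2, 'MaxEpochs', 200, ...
    'DecoderTransferFunction', 'purelin', 'ShowProgressWindow', false);

% Per-sample reconstruction error on the training data.
errTrain = mean((Xnormal - predict(autoenc, Xnormal)).^2, 1);

% Threshold at roughly the 99th percentile of the training error.
sortedErr = sort(errTrain);
threshold = sortedErr(ceil(0.99*numel(sortedErr)));

% A new sample that breaks the relationship between the features.
xNew = [1; -2; 1; 3];
errNew = mean((xNew - predict(autoenc, xNew)).^2, 1);
isAnomalous = errNew > threshold;
```

The same pattern applies to signal windows or image patches: train on normal examples, measure reconstruction error, and flag inputs whose error is unusually large.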

When you have anomalies in your data but cannot label them, you can also try unsupervised clustering approaches to anomaly detection. Sometimes you can associate clusters with normal and anomalous data, but unless your dataset is balanced (containing many anomalies of the same type), useful results are more likely with the normal-only methods.

Prior to applying anomaly detection algorithms, you often need to extract features from your raw data. MATLAB supports both manual and automated methods for extracting features from signal, image, and text data. The Diagnostic Feature Designer in Predictive Maintenance Toolbox™ can help you extract features from many types of signals.

Key Points

- Anomaly detection helps you identify outliers, deviations from normal, and unexpected behaviors

- If you have sufficient labeled data (including anomalies), use supervised learning for anomaly detection

- If you have mostly normal data, apply one of the specialized normal-only anomaly detection approaches

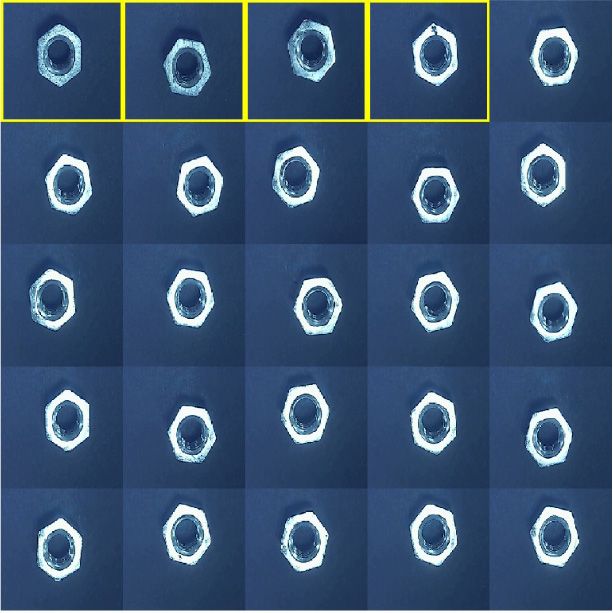

Detect Object Defects in Images with MATLAB

To build an image-based anomaly detection algorithm, you can: 1) feed your images through the pretrained AlexNet convolutional neural network, 2) use the network activations after the first layers as features, and then 3) train a one-class support vector machine with fitcsvm. The one-class SVM is trained on normal images, and negative classification scores indicate anomalies. In the example below, the trained model correctly identified the four hex nuts with surface defects. Try it in this example.
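The three steps above can be sketched as follows. This is an outline under stated assumptions, not the code from the hex nut example: the random arrays are placeholders for real images, the layer name 'relu3' is an assumed choice of early layer, and the alexnet function requires the Deep Learning Toolbox Model for AlexNet Network support package.

```matlab
net = alexnet;                            % pretrained network
inputSize = net.Layers(1).InputSize;      % expected image size, [227 227 3]

% Placeholder data: replace with real images of normal and test parts,
% resized to inputSize.
rng(0);
normalImages = 255*rand([inputSize 20], 'single');
testImages   = 255*rand([inputSize 5],  'single');

% Steps 1 and 2: use activations from an early layer as features.
layer = 'relu3';                          % assumed layer choice
featNormal = activations(net, normalImages, layer, 'OutputAs', 'rows');
featTest   = activations(net, testImages,   layer, 'OutputAs', 'rows');

% Step 3: train a one-class SVM on features from normal images only.
mdl = fitcsvm(featNormal, ones(size(featNormal,1),1), ...
    'KernelScale', 'auto', 'OutlierFraction', 0.1);

% Negative scores indicate likely anomalies.
[~, score] = predict(mdl, featTest);
isDefective = score < 0;
```

With real data, you would load the images from disk, for example with an image datastore, and choose the layer and outlier fraction by checking results on a few known defective parts.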

See also: Statistics and Machine Learning Toolbox™, Predictive Maintenance Toolbox™, Image Processing Toolbox™, Deep Learning Toolbox™, autoencoders, Feature Selection, Feature Engineering, Machine Learning Models, What Is Machine Learning?, Support Vector Machine (SVM), Predictive Maintenance, Condition Monitoring, Computer Vision