Calibration and Sensor Fusion

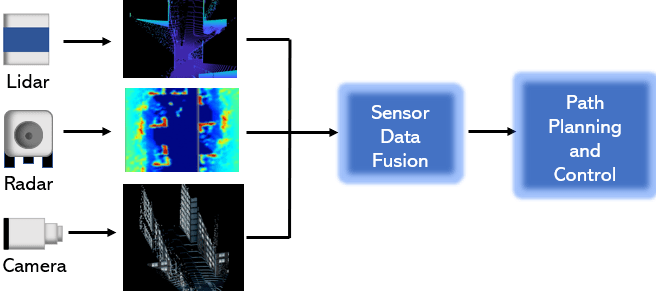

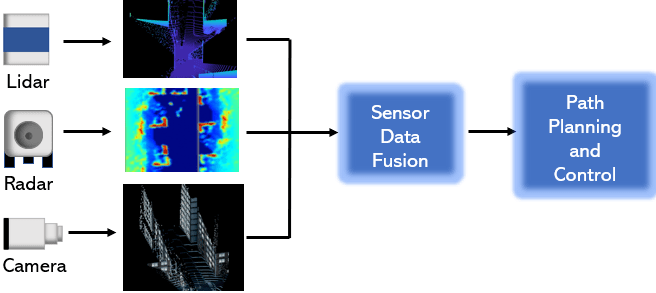

Most modern autonomous systems in applications such as manufacturing, transportation, and construction employ multiple sensors. Sensor fusion is the process of combining data from multiple sensors, such as radar sensors, lidar sensors, and cameras. The fused data is more accurate than the data from any single sensor because it uses the strengths of each sensor to compensate for the limitations of the others.

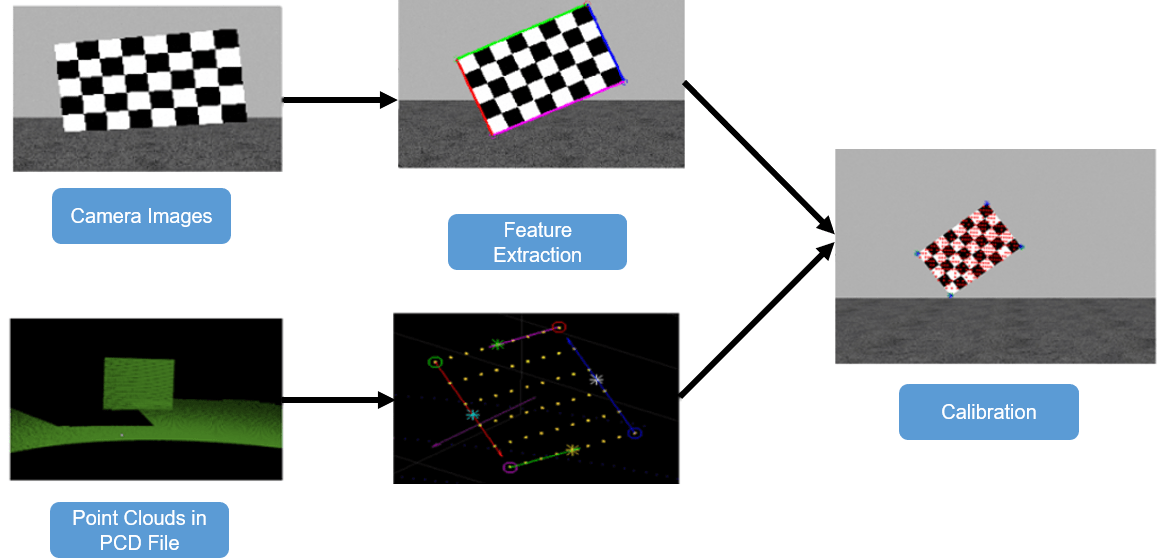

To understand and correlate the data from individual sensors, you must develop a geometric correspondence between them. Calibration is the process of developing this correspondence. Use Lidar Toolbox™ functions to perform lidar-camera calibration. To get started, see What Is Lidar-Camera Calibration?

You can also interactively calibrate the sensors by using the Lidar Camera Calibrator app. For more information, see Get Started with Lidar Camera Calibrator.
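As an illustration of what the geometric correspondence amounts to, calibration results are commonly expressed as a rigid transformation (a rotation and a translation) that maps points from the lidar frame to the camera frame. The following is a minimal Python sketch of applying such a transformation. It does not use Lidar Toolbox; the function name `lidar_to_camera`, the axis conventions, and the numeric values are all hypothetical and chosen only for illustration.

```python
def lidar_to_camera(point, rotation, translation):
    """Map a 3-D point from the lidar frame to the camera frame.

    Computes p_camera = R * p_lidar + t, where R is a 3x3 rotation
    matrix (row-major nested tuples) and t is a 3-element translation.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical extrinsics (assumed for this sketch only):
# lidar axes are x-forward, y-left, z-up; camera axes are
# x-right, y-down, z-forward.
ROTATION = (
    (0.0, -1.0, 0.0),   # camera x = -lidar y
    (0.0, 0.0, -1.0),   # camera y = -lidar z
    (1.0, 0.0, 0.0),    # camera z =  lidar x
)
TRANSLATION = (0.0, -0.1, 0.0)  # assumed offset between sensors, in meters

point_lidar = (5.0, 1.0, 0.5)
point_camera = lidar_to_camera(point_lidar, ROTATION, TRANSLATION)
print(point_camera)
```

In practice, the rotation and translation come from the calibration step rather than being written by hand.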

Lidar Toolbox also supports downstream workflows such as projecting lidar points on images, fusing color information in lidar point clouds, and transferring bounding boxes from camera data to lidar data.
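To make the first two of these workflows concrete, the sketch below projects 3-D points onto an image with a simple pinhole camera model and attaches the color of the pixel each point lands on. It is a generic Python illustration, not the Lidar Toolbox implementation. It assumes the points are already expressed in the camera frame, ignores lens distortion, and uses hypothetical names (`project_to_pixel`, `fuse_color`) and made-up intrinsics.

```python
def project_to_pixel(point_camera, fx, fy, cx, cy):
    """Project a camera-frame point to pixel coordinates (u, v).

    Uses the pinhole model u = fx * x / z + cx, v = fy * y / z + cy.
    Returns None for points at or behind the image plane (z <= 0).
    """
    x, y, z = point_camera
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def fuse_color(points_camera, image, fx, fy, cx, cy):
    """Pair each visible point with the color of the pixel it projects to.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    Points that project outside the image are dropped.
    """
    height, width = len(image), len(image[0])
    colored = []
    for point in points_camera:
        pixel = project_to_pixel(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        col, row = int(round(pixel[0])), int(round(pixel[1]))
        if 0 <= row < height and 0 <= col < width:
            colored.append((point, image[row][col]))
    return colored


# Tiny synthetic 4x4 image whose color encodes the pixel position.
image = [[(row * 10, col * 10, 0) for col in range(4)] for row in range(4)]

points = [
    (0.0, 0.0, 1.0),    # projects to the principal point
    (1.0, 0.0, 2.0),    # projects one pixel to the right of it
    (0.0, 0.0, -1.0),   # behind the camera, skipped
    (10.0, 0.0, 1.0),   # projects outside the image, skipped
]

# Hypothetical intrinsics: focal lengths and principal point in pixels.
fused = fuse_color(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
for point, color in fused:
    print(point, color)
```

The same projection, applied to the corners of a 3-D box instead of individual points, is the basic idea behind moving bounding boxes between lidar and camera data.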

Apps

| App | Description |
| --- | --- |
| Lidar Camera Calibrator | Interactively establish correspondences between lidar sensor and camera to fuse sensor data (Since R2021a) |

Functions

Topics

- What Is Lidar-Camera Calibration?

Fuse lidar and camera data.

- Calibration Guidelines

Guidelines to help you achieve accurate results for lidar-camera calibration.

- Coordinate Systems in Lidar Toolbox

Overview of coordinate systems in Lidar Toolbox.

- Get Started with Lidar Camera Calibrator

Interactively calibrate lidar and camera sensors.

- Read Lidar and Camera Data from Rosbag File

This example shows how to read and save images and point cloud data from a rosbag file.