Transfer Learning with Deep Network Designer

This example shows how to perform transfer learning interactively using the Deep Network Designer app.

Transfer learning is the process of taking a pretrained deep learning network and fine-tuning it to learn a new task. Using transfer learning is usually faster and easier than training a network from scratch. You can quickly transfer learned features to a new task using a smaller amount of data.

Use Deep Network Designer to perform transfer learning for image classification by following these steps:

Open the Deep Network Designer app and choose a pretrained network.

Import the new data set.

Adapt the final layers to the new data set.

Set learning rates so that learning is faster in the new layers than in the transferred layers.

Train the network using Deep Network Designer, or export the network for training at the command line.

Extract Data

In the workspace, extract the MathWorks Merch data set. This is a small data set containing 75 images of MathWorks merchandise, belonging to five different classes (cap, cube, playing cards, screwdriver, and torch).

unzip("MerchData.zip");

Select a Pretrained Network

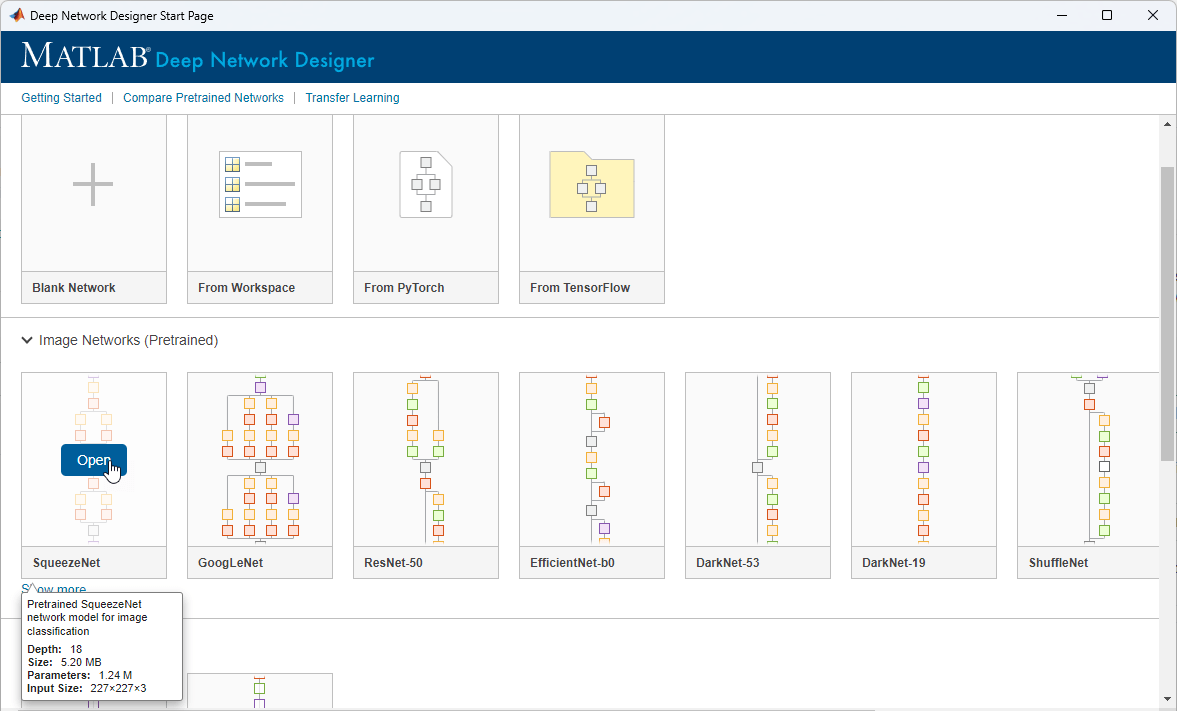

To open Deep Network Designer, on the Apps tab, under Machine Learning and Deep Learning, click the app icon. Alternatively, you can open the app from the command line:

deepNetworkDesigner

Deep Network Designer provides a selection of pretrained image classification networks that have learned rich feature representations suitable for a wide range of images. Transfer learning works best if your images are similar to the images originally used to train the network. If your training images are natural images like those in the ImageNet database, then any of the pretrained networks is suitable. For a list of available networks and how to compare them, see Pretrained Deep Neural Networks.

If your data is very different from the ImageNet data—for example, if you have tiny images, spectrograms, or nonimage data—training a new network might be better. For examples showing how to train a network from scratch, see Create Simple Sequence Classification Network Using Deep Network Designer and Train Simple Semantic Segmentation Network in Deep Network Designer.

SqueezeNet does not require an additional support package. For other pretrained networks, if you do not have the required support package installed, then the app provides the Install option.

Select SqueezeNet from the list of pretrained networks and click Open.

Explore Network

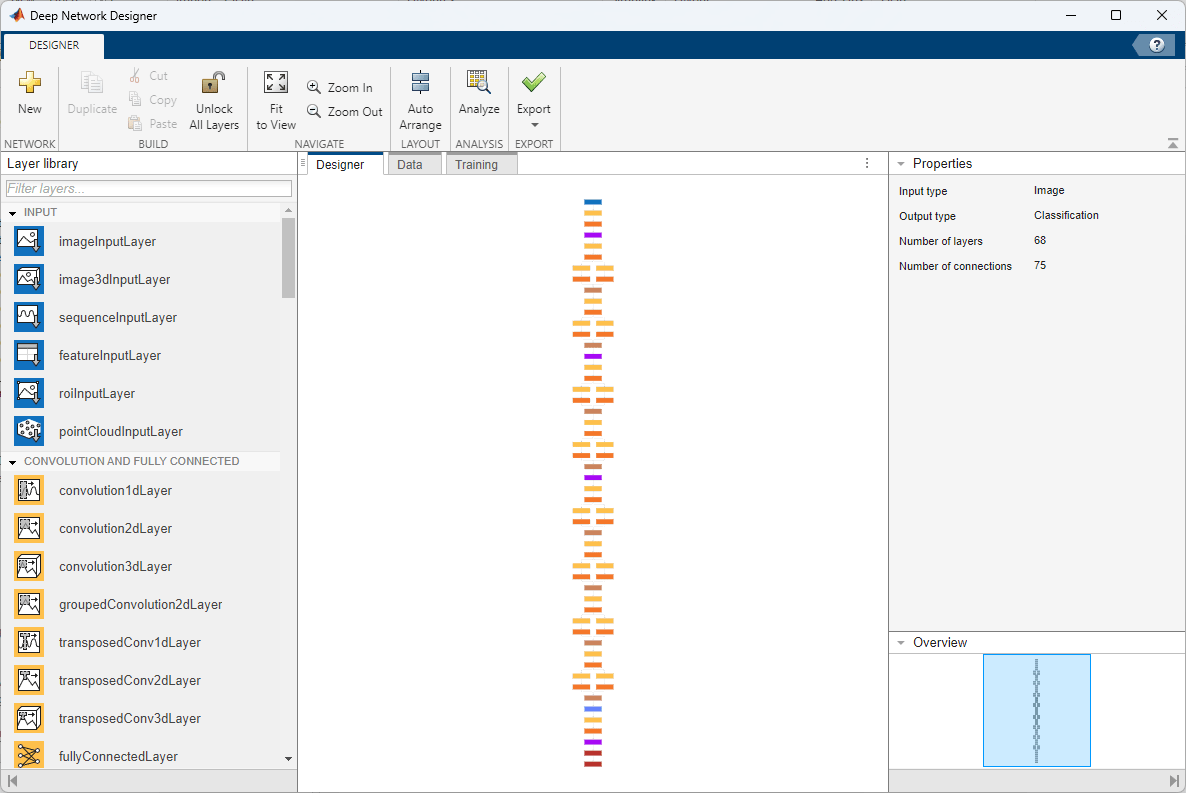

Deep Network Designer displays a zoomed-out view of the whole network in the Designer pane.

Explore the network plot. To zoom in with the mouse, use Ctrl+scroll wheel. To pan, use the arrow keys, or hold down the scroll wheel and drag the mouse. Select a layer to view its properties. Deselect all layers to view the network summary in the Properties pane.

Import Data

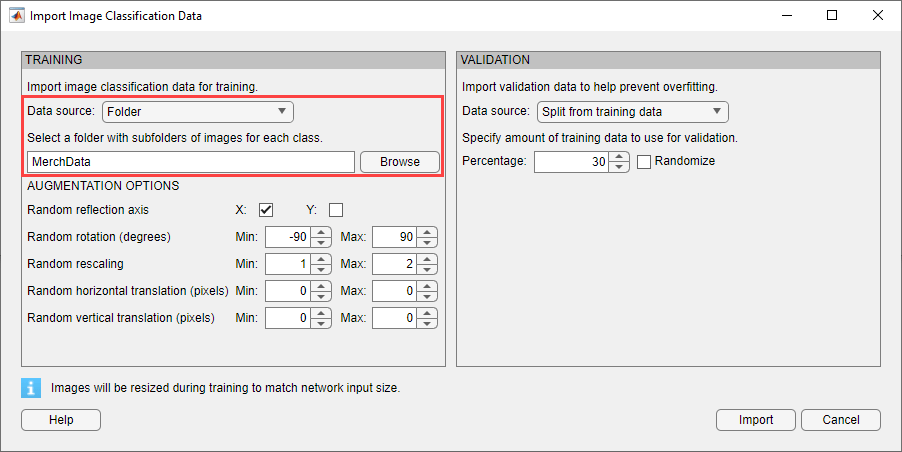

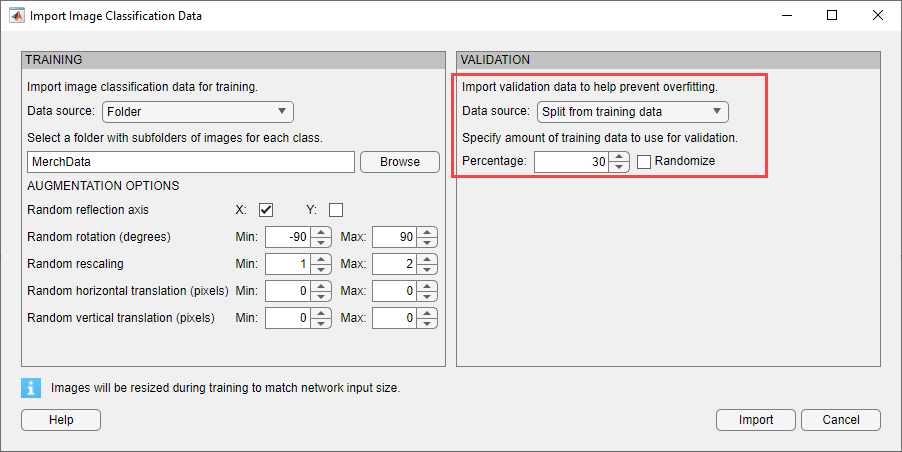

To load the data into Deep Network Designer, on the Data tab, click Import Data > Import Image Classification Data.

In the Data source list, select Folder. Click Browse and select the extracted MerchData folder.
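For readers who prefer the command line, a rough equivalent of this import step is sketched below. It assumes that the extracted MerchData folder sits in the current folder and contains one subfolder per class, so that the folder names can serve as labels.

```matlab
% Sketch: load the extracted images into a datastore, labeling each
% image with the name of the subfolder that contains it.
% Assumption: "MerchData" is in the current folder, one subfolder per class.
imds = imageDatastore("MerchData", ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");

% Tabulate how many images each class contains.
labelCounts = countEachLabel(imds);
disp(labelCounts)
```

The resulting table is a quick way to confirm that all five classes were found before you continue.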

Image Augmentation

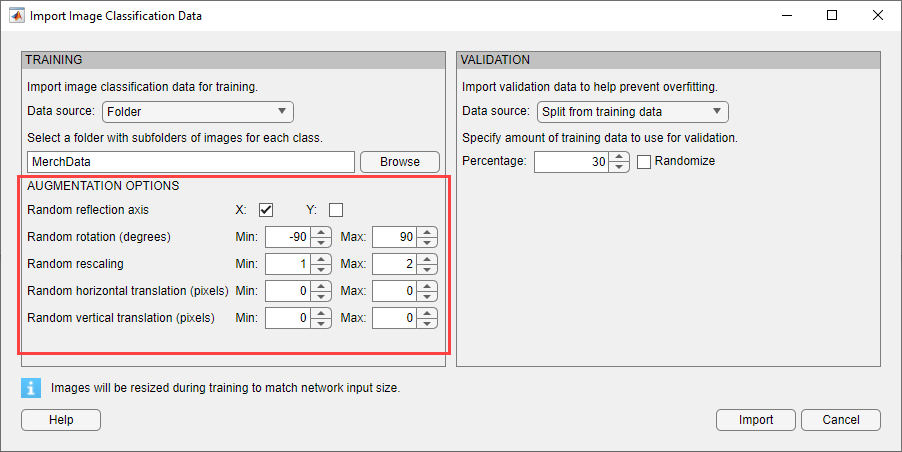

You can choose to apply image augmentation to your training data. The Deep Network Designer app provides the following augmentation options:

Random reflection in the x-axis

Random reflection in the y-axis

Random rotation

Random rescaling

Random horizontal translation

Random vertical translation

You can effectively increase the amount of training data by applying randomized augmentation to your data. Augmentation also enables you to train networks to be invariant to distortions in image data. For example, you can add randomized rotations to input images so that a network is invariant to the presence of rotation in input images.

For this example, apply a random reflection in the x-axis, a random rotation from the range [-90,90] degrees, and a random rescaling from the range [1,2].
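At the command line, these augmentation settings could be expressed roughly as follows. This is a sketch, not the code the app generates: it assumes that the app's "random reflection in the x-axis" option corresponds to the RandXReflection property of imageDataAugmenter, and it uses the 227-by-227 SqueezeNet input size as the output size.

```matlab
% Sketch: the augmentation settings from this example as an
% imageDataAugmenter object.
augmenter = imageDataAugmenter( ...
    RandXReflection=true, ...   % assumed match for the app's x-axis reflection
    RandRotation=[-90 90], ...  % rotation range in degrees
    RandScale=[1 2]);           % rescaling range

% Apply the augmentation on the fly and resize images to the
% network input size. Assumption: MerchData is in the current folder.
imds = imageDatastore("MerchData", ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");
augimds = augmentedImageDatastore([227 227], imds, ...
    DataAugmentation=augmenter);
```

The augmented datastore transforms each image randomly every time it is read, so the network sees slightly different versions of the same images in each epoch.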

Validation Data

You can also choose validation data either by splitting it from the training data, known as holdout validation, or by importing it from another source. Validation estimates model performance on new data compared to the training data, and helps you to monitor performance and protect against overfitting.

For this example, use 30% of the images for validation.

Click Import to import the data into Deep Network Designer.
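A holdout split like the one described above can be sketched at the command line with splitEachLabel. The split is random, so the exact images in each set will differ from those the app selects, and the snippet assumes the MerchData folder layout described earlier.

```matlab
% Sketch: hold out 30% of the images in each class for validation.
% Assumption: MerchData is in the current folder, one subfolder per class.
imds = imageDatastore("MerchData", ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");

% Keep 70% of each class for training; the remainder is for validation.
[imdsTrain, imdsValidation] = splitEachLabel(imds, 0.7, "randomized");

numTrain = numel(imdsTrain.Files);
numVal = numel(imdsValidation.Files);
fprintf("Training images: %d, validation images: %d\n", numTrain, numVal);
```

Because the split is applied per class, every class is represented in both the training and validation sets.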

Visualize Data

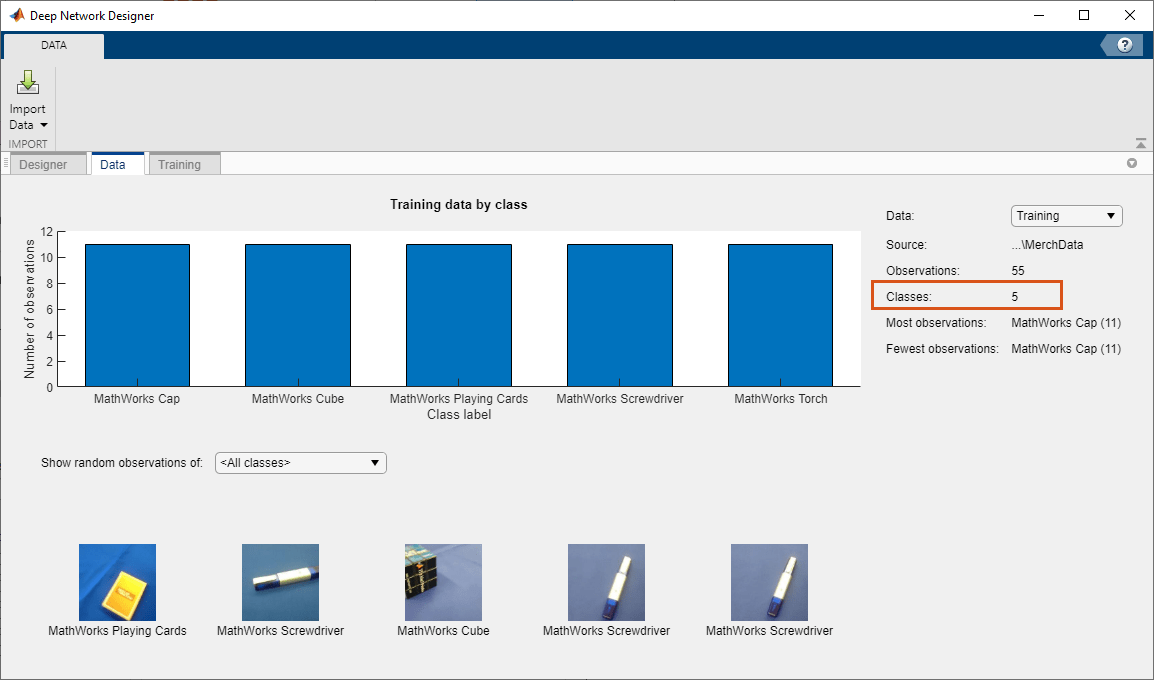

Using Deep Network Designer, you can visually inspect the distribution of the training and validation data in the Data tab. You can see that, in this example, there are five classes in the data set. You can also see random observations from each class.

Prepare Network for Training

Edit the network in the Designer pane so that it outputs the number of classes in your data. To prepare the network for transfer learning, edit the last learnable layer and the final classification layer.

Edit Last Learnable Layer

To use a pretrained network for transfer learning, you must change the number of classes to match your new data set. First, find the last learnable layer in the network. For SqueezeNet, the last learnable layer is the last convolutional layer, 'conv10'. Select the 'conv10' layer. At the bottom of the Properties pane, click Unlock Layer. In the warning dialog that appears, click Unlock Anyway. This unlocks the layer properties so that you can adapt them to your new task.

Before R2023b: To edit the layer properties, you must replace the layers instead of unlocking them. In the new convolutional 2-D layer, set the FilterSize to [1 1].

The NumFilters property defines the number of classes for classification problems. Change NumFilters to the number of classes in the new data, in this example, 5.

Change the learning rates so that learning is faster in the new layer than in the transferred layers by setting WeightLearnRateFactor and BiasLearnRateFactor to 10.
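As a rough command-line counterpart to this edit, the sketch below builds a replacement convolutional layer and swaps it in for 'conv10'. It assumes that the squeezenet function is available in your release. The layer name "new_conv" is a placeholder chosen for illustration.

```matlab
% Sketch: replace the last learnable layer of SqueezeNet with a new
% 1-by-1 convolution that has one filter per class.
numClasses = 5;

newConv = convolution2dLayer([1 1], numClasses, ...
    Name="new_conv", ...           % hypothetical name
    WeightLearnRateFactor=10, ...  % learn faster than transferred layers
    BiasLearnRateFactor=10);

% Assumption: squeezenet is available and returns the pretrained network.
lgraph = layerGraph(squeezenet);
lgraph = replaceLayer(lgraph, "conv10", newConv);
```

The learn rate factors multiply the global learning rate, so the new layer trains ten times faster than layers that keep the default factor of 1.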

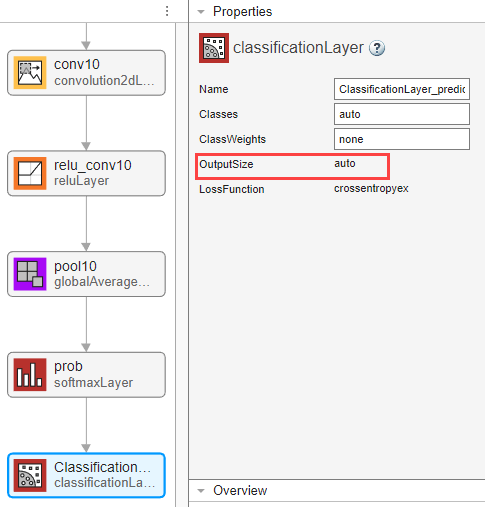

Edit Output Layer

Next, configure the output layer. Select the final classification layer, ClassificationLayer_predictions, and click Unlock Layer and then click Unlock Anyway. For the unlocked output layer, you do not need to set the OutputSize. At training time, Deep Network Designer automatically sets the output classes of the layer from the data.
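If you work at the command line instead, a comparable step is to replace the classification layer with a fresh one whose classes are left unset. The sketch below assumes that the squeezenet function is available. The layer name "new_classoutput" is a placeholder.

```matlab
% Sketch: swap the pretrained output layer for a new classification
% layer. Its classes default to "auto" and are set from the data at
% training time.
lgraph = layerGraph(squeezenet);   % assumption: squeezenet is available

newOutput = classificationLayer(Name="new_classoutput");  % hypothetical name
lgraph = replaceLayer(lgraph, "ClassificationLayer_predictions", newOutput);
```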

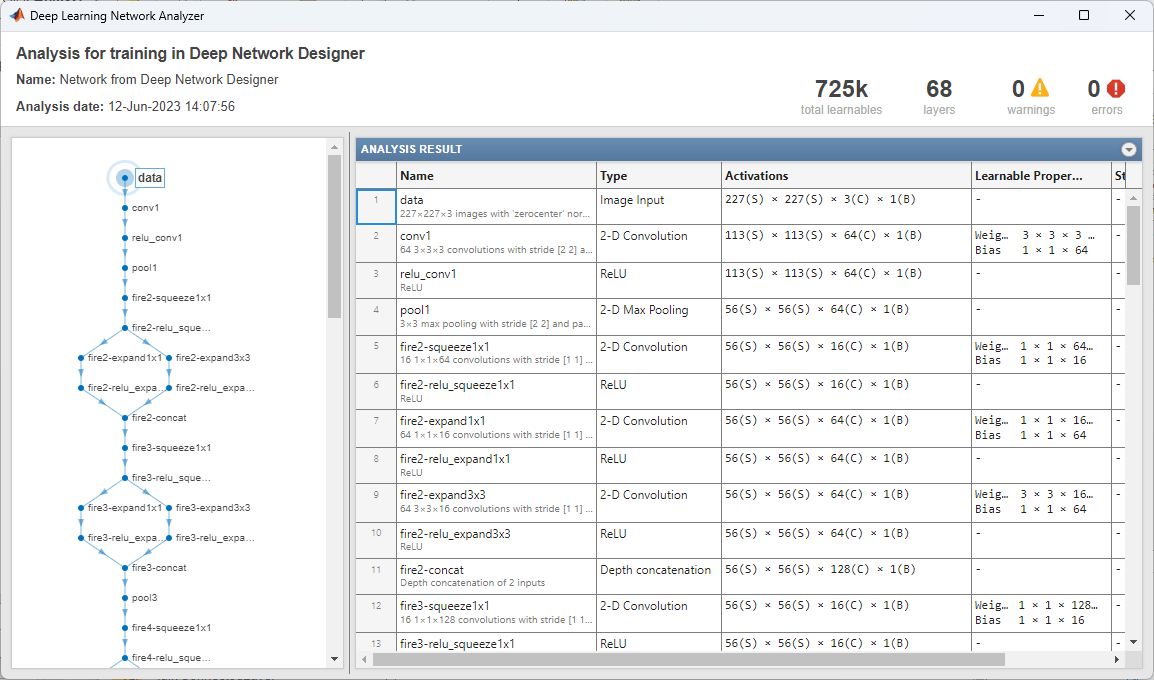

Check Network

To check that the network is ready for training, click Analyze. If the Deep Learning Network Analyzer reports zero errors, then the edited network is ready for training.

Train Network

In Deep Network Designer you can train networks imported or created in the app.

To train the network with the default settings, on the Training tab, click Train. The default training options are better suited to large data sets. For small data sets, reduce the mini-batch size and the validation frequency.

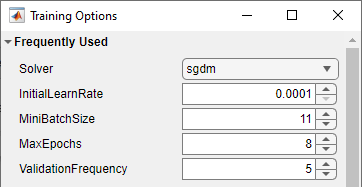

If you want greater control over the training, click Training Options and choose the settings to train with.

Set the initial learn rate to a small value to slow down learning in the transferred layers.

Specify validation frequency so that the accuracy on the validation data is calculated once every epoch.

Specify a small number of epochs. An epoch is a full training cycle on the entire training data set. For transfer learning, you do not need to train for as many epochs as when training a network from scratch.

Specify the mini-batch size, that is, how many images to use in each iteration. To ensure the whole data set is used during each epoch, set the mini-batch size to evenly divide the number of training samples.

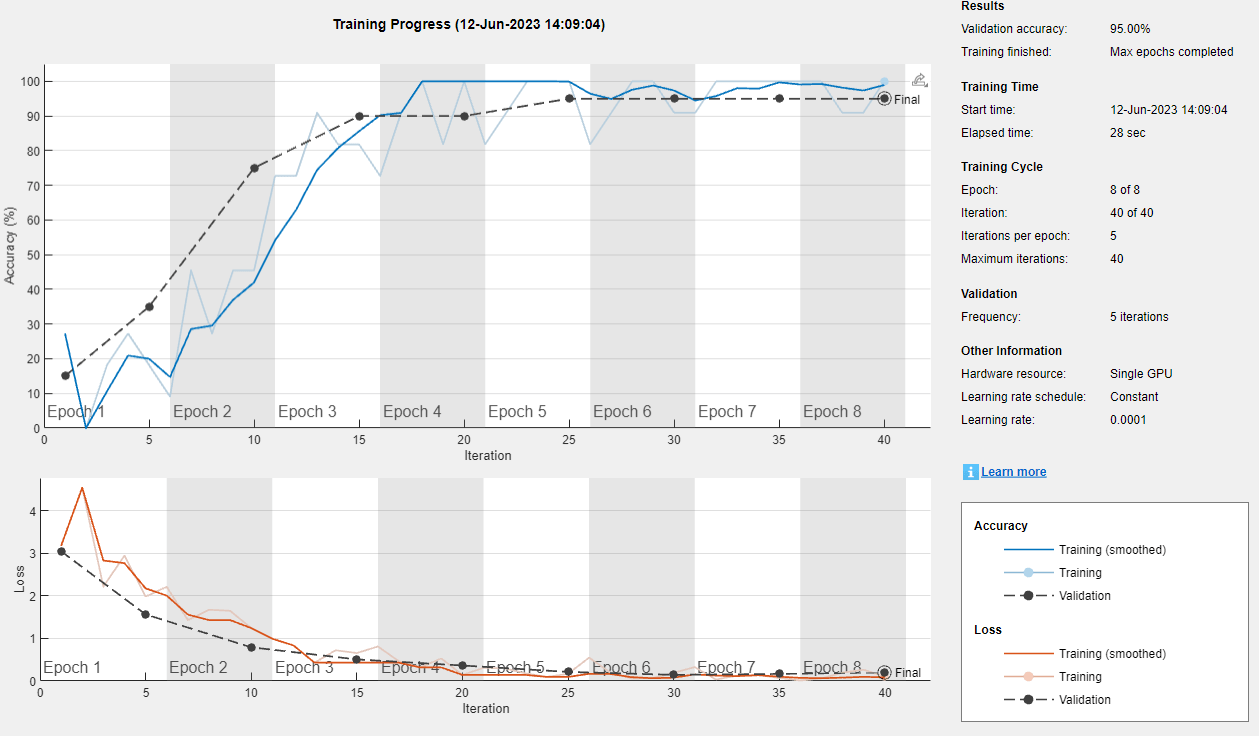

For this example, set InitialLearnRate to 0.0001, MaxEpochs to 8, and ValidationFrequency to 5. As there are 55 observations, set MiniBatchSize to 11 to divide the training data evenly and ensure you use the whole training data set during each epoch. For more information on selecting training options, see trainingOptions.
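The arithmetic behind these choices, and a comparable trainingOptions call, can be sketched as follows. The "sgdm" solver and the Shuffle setting are assumptions, because this example does not state which solver or shuffling behavior the app uses.

```matlab
% Values taken from this example.
numTrain = 55;
miniBatchSize = 11;
maxEpochs = 8;

% 55/11 = 5 iterations per epoch, with no images left over.
iterationsPerEpoch = floor(numTrain/miniBatchSize);
leftover = mod(numTrain, miniBatchSize);

% 5 iterations per epoch over 8 epochs gives 40 iterations in total.
totalIterations = iterationsPerEpoch*maxEpochs;

% ValidationFrequency is counted in iterations, so a value equal to the
% number of iterations per epoch validates once per epoch.
options = trainingOptions("sgdm", ...   % assumed solver
    InitialLearnRate=1e-4, ...
    MaxEpochs=maxEpochs, ...
    MiniBatchSize=miniBatchSize, ...
    ValidationFrequency=iterationsPerEpoch, ...
    Shuffle="every-epoch");             % assumed setting
```

To validate during training at the command line, you would also pass your validation set through the ValidationData option.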

To train the network with the specified training options, click OK and then click Train.

Deep Network Designer allows you to visualize and monitor training progress. You can then edit the training options and retrain the network, if required.

To save the training plot as an image, click Export Training Plot.

Export Results and Generate MATLAB Code

To export the network architecture with the trained weights, on the Training tab, select Export > Export Trained Network and Results. Deep Network Designer exports the trained network as the variable trainedNetwork_1 and the training info as the variable trainInfoStruct_1.

trainInfoStruct_1

trainInfoStruct_1 = struct with fields:

TrainingLoss: [3.1794 4.5479 2.2169 2.9521 1.9841 2.2164 1.4329 1.6750 1.6514 1.2448 0.9932 0.8375 0.4307 0.7229 0.6570 0.8118 0.4625 0.3193 0.5299 0.1430 0.3089 0.2988 0.1780 0.0959 0.1691 0.5496 0.1991 0.0629 0.0672 … ] (1×40 double)

TrainingAccuracy: [27.2727 0 18.1818 27.2727 18.1818 9.0909 45.4545 27.2727 45.4545 45.4545 72.7273 72.7273 90.9091 81.8182 81.8182 72.7273 90.9091 100 81.8182 100 81.8182 90.9091 100 100 100 81.8182 90.9091 100 100 90.9091 … ] (1×40 double)

ValidationLoss: [3.0507 NaN NaN NaN 1.5660 NaN NaN NaN NaN 0.7928 NaN NaN NaN NaN 0.5077 NaN NaN NaN NaN 0.3644 NaN NaN NaN NaN 0.2133 NaN NaN NaN NaN 0.1448 NaN NaN NaN NaN 0.1662 NaN NaN NaN NaN 0.2037]

ValidationAccuracy: [15 NaN NaN NaN 35 NaN NaN NaN NaN 75.0000 NaN NaN NaN NaN 90 NaN NaN NaN NaN 90 NaN NaN NaN NaN 95 NaN NaN NaN NaN 95 NaN NaN NaN NaN 95 NaN NaN NaN NaN 95]

BaseLearnRate: [1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 1.0000e-04 … ] (1×40 double)

FinalValidationLoss: 0.2037

FinalValidationAccuracy: 95

OutputNetworkIteration: 40
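The validation fields contain NaN for every iteration at which no validation was performed, so it helps to filter those entries out before plotting or summarizing. The sketch below rebuilds the ValidationAccuracy values shown above in a stand-in struct named info. With the exported results, you would use trainInfoStruct_1 directly.

```matlab
% Stand-in for trainInfoStruct_1, using the validation accuracies shown
% in the output above (validation ran at iterations 1, 5, 10, ..., 40).
valAcc = NaN(1, 40);
valAcc([1 5 10 15 20 25 30 35 40]) = [15 35 75 90 90 95 95 95 95];
info = struct("ValidationAccuracy", valAcc);

% Keep only the iterations at which validation was actually computed.
valIters = find(~isnan(info.ValidationAccuracy));
valValues = info.ValidationAccuracy(valIters);

% Plot validation accuracy against iteration number.
plot(valIters, valValues, "-o")
xlabel("Iteration")
ylabel("Validation accuracy (%)")

% Find the first iteration that reached the best validation accuracy.
[bestAcc, k] = max(valValues);
bestIter = valIters(k);
```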

You can also generate MATLAB code, which recreates the network and the training options used. On the Training tab, select Export > Generate Code for Training. Examine the MATLAB code to learn how to programmatically prepare the data for training, create the network architecture, and train the network.

Classify New Image

Load a new image to classify using the trained network.

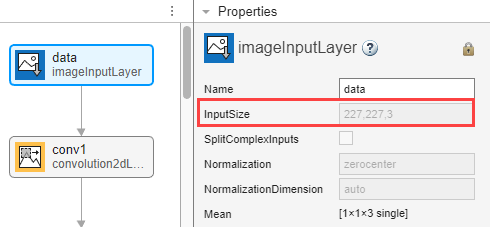

I = imread("MerchDataTest.jpg");

Deep Network Designer resizes the images during training to match the network input size. To view the network input size, go to the Designer pane and select the imageInputLayer (first layer). This network has an input size of 227-by-227.

Resize the test image to match the network input size.

I = imresize(I, [227 227]);
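Rather than hard-coding the size, you can read it from the input layer of the network. The sketch below uses the pretrained SqueezeNet as a stand-in and assumes that the squeezenet function is available. With the exported network, you would query trainedNetwork_1 in the same way.

```matlab
% Sketch: read the input size from the first layer of the network.
net = squeezenet;                      % stand-in for trainedNetwork_1
inputSize = net.Layers(1).InputSize;   % height, width, channels

% Resize the test image to the height and width the network expects.
I = imread("MerchDataTest.jpg");
I = imresize(I, inputSize(1:2));
```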

Classify the test image using the trained network.

[YPred,probs] = classify(trainedNetwork_1,I);
imshow(I)
label = YPred;
title(string(label) + ", " + num2str(100*max(probs),3) + "%");