Verifying Models and Code for High-Integrity Systems

By Bill Potter, MathWorks

Software testing is one of the most demanding and time-consuming aspects of developing complex systems because it involves ensuring that all requirements are tested and that all software is exercised by the testing. Using a sensor voting algorithm in a helicopter flight control system as an example, this article describes a streamlined verification workflow based on Simulink® and supporting verification tools. Topics covered include creating test cases from requirements, reusing those test cases for models and code, generating test cases for missing model coverage, and achieving full structural coverage on the executable object code.

Helicopter Control System Requirements and Design

Development of any complex system begins with requirements. Typically captured in a textual document or in a requirements management tool, they become the basis for system design and verification. Requirements usually also carry some type of tag or identifier so that they can be tracked and linked to design and test artifacts.

In the helicopter control system example, we’ll be using requirements related to voting of the attitude/heading reference system (AHRS). These are the five requirements of interest:

HLR_9 AHRS Validity Check

Prior to using the data from an AHRS, the flight control software shall verify the AHRS data is valid.

HLR_10 AHRS Input Signal Processing

The flight control computer hardware processes three AHRS digital bus inputs.

The characteristics of the AHRS inputs to the software from each of the three sensors are defined in the following table.

| Signal | Input Sign | Input Range |
| --- | --- | --- |
| AHRS Valid | N/A | 1 = Valid, 0 = Invalid |
| Pitch Attitude | Up = + | +/- 90 degrees |
| Roll Attitude | Right = + | +/- 180 degrees |
| Pitch Body Rate | Up = + | +/- 60 deg/sec |
| Yaw Body Rate | Right = + | +/- 60 deg/sec |

HLR_11 AHRS Voting for Triple Sensors

When three AHRSs are valid, the flight control computer shall use the middle value of the three sensors for each of the individual parameters from the AHRSs.

HLR_12 AHRS Voting for Dual Sensors

When only two AHRSs are valid, the flight control computer shall use the average of the two sensors for each of the individual parameters from the AHRSs.

HLR_13 AHRS Usage of Single Sensor

When only one AHRS is valid, the flight control computer shall use the individual parameters from that AHRS.
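The three voting requirements (HLR_11 through HLR_13) can be illustrated with a minimal sketch. This is not the Simulink model from the article; the function name `vote` and the choice to return `None` when no sensor is valid are assumptions made only for this illustration, since the requirements listed here do not say what happens in that case.

```python
from statistics import median


def vote(values, valid):
    """Vote one AHRS parameter from three sensors.

    values: three readings of the same parameter, one per AHRS.
    valid:  three validity flags (1 = valid, 0 = invalid), as in HLR_10.
    """
    good = [v for v, ok in zip(values, valid) if ok]
    if len(good) == 3:
        return median(good)                # HLR_11: middle value of three
    if len(good) == 2:
        return (good[0] + good[1]) / 2.0   # HLR_12: average of two
    if len(good) == 1:
        return good[0]                     # HLR_13: the single valid sensor
    return None  # assumption: no-valid-sensor behavior is not specified here


triple = vote([1.0, 5.0, 3.0], [1, 1, 1])
dual = vote([2.0, 4.0, 9.0], [1, 0, 1])
single = vote([2.0, 4.0, 9.0], [0, 1, 0])
```

Each of the three calls at the end exercises exactly one requirement, which mirrors the one-test-case-per-requirement approach used later in the article.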

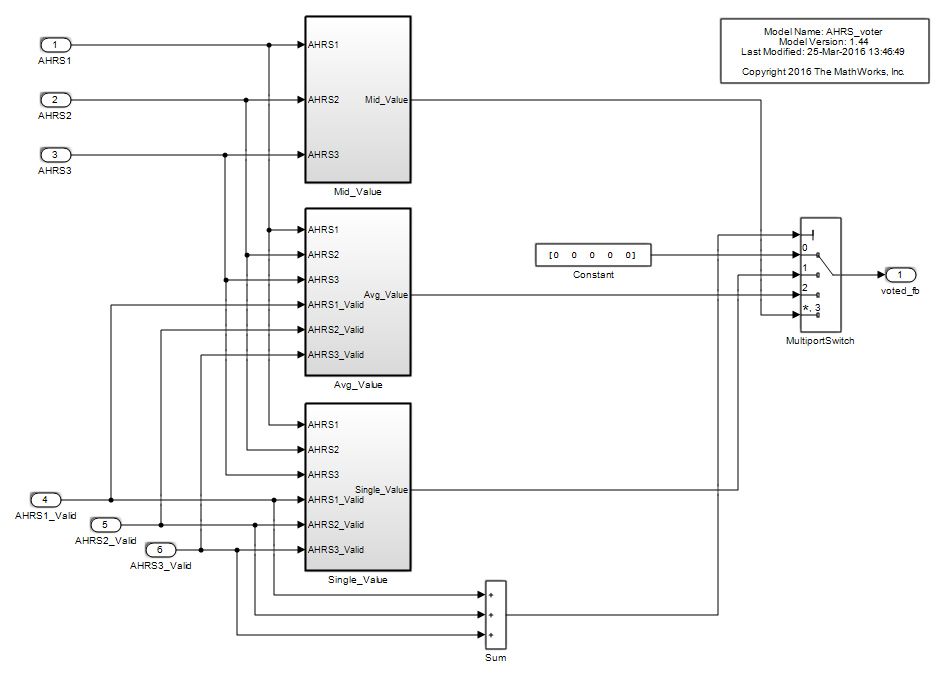

We create a Simulink model to implement these requirements (Figure 1).

Verification by Simulation

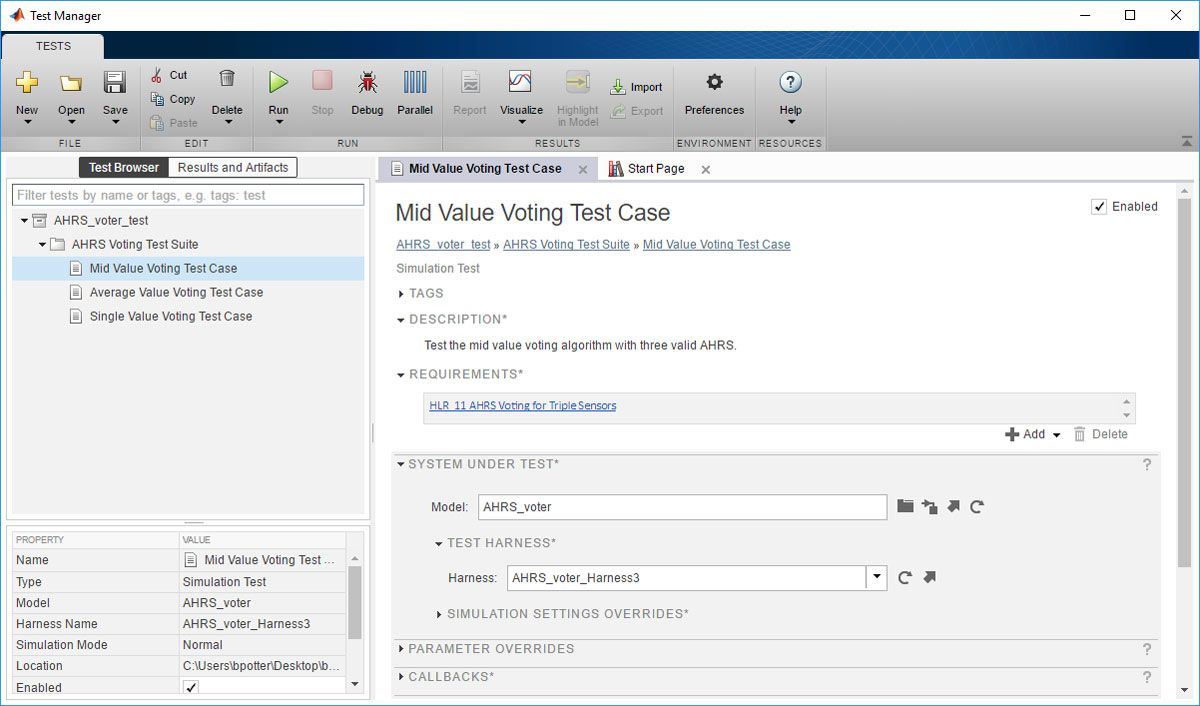

To verify the design against the software requirements we use the Test Manager in Simulink Test™ to link the test cases to simulation test harnesses created in Simulink (Figure 2).

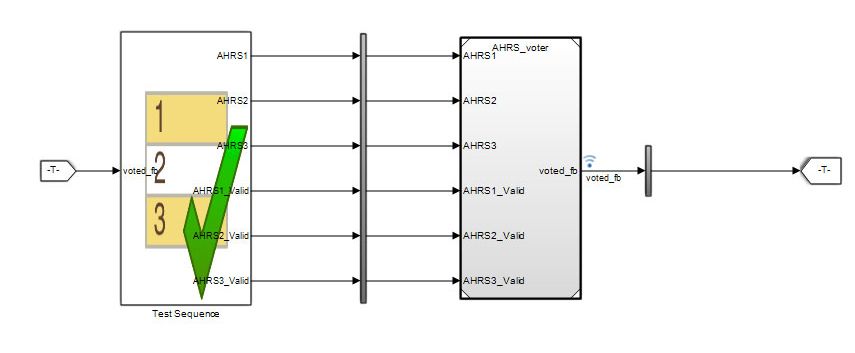

We implement three test cases for the AHRS voting algorithm, one for each voting requirement. The case shown in Figure 2, for testing the three valid sensors, traces to HLR_11 AHRS Voting for Triple Sensors in the requirements document. Figure 3 shows the corresponding test harness, a Test Sequence block driving the component under test.

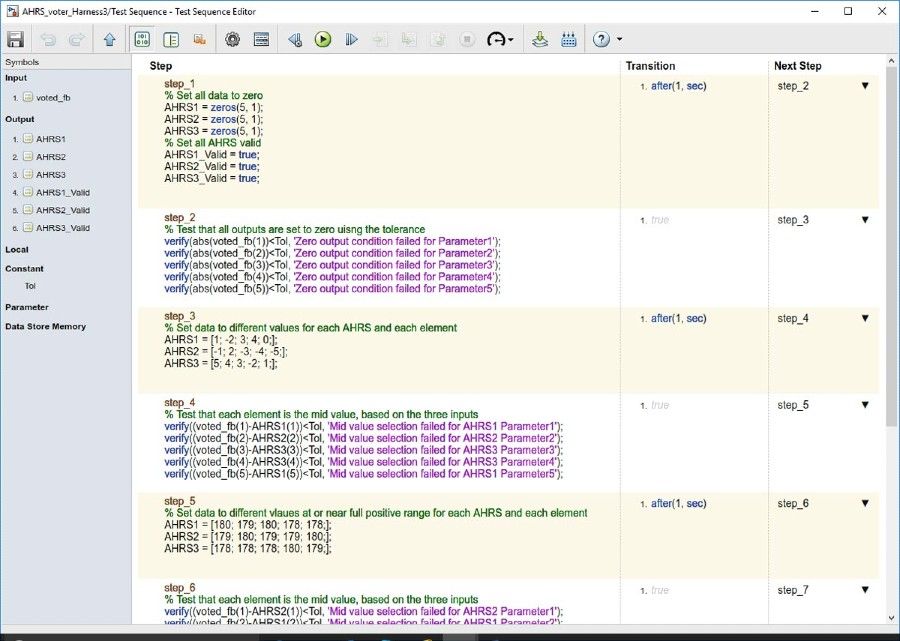

The Test Sequence block in the harness contains the tests that we created based on the requirements. We can use it to define test steps with input data for the model under test, as well as expected result data for the test. There is a Verify function in the test sequence language for evaluating pass or fail for each test step (Figure 4).
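The idea of test steps that pair input data with expected results can be sketched outside Simulink as follows. This is only an analogy to a Test Sequence block, not its actual syntax; the step names, the input values, and the helpers `mid_value` and `run_sequence` are hypothetical.

```python
def mid_value(a, b, c):
    """Component under test: middle value of three readings (HLR_11)."""
    return sorted((a, b, c))[1]


# Each step supplies inputs and the expected output, like a test step
# with an associated pass/fail check.
STEPS = [
    {"name": "sensor_1_is_middle", "inputs": (12.0, 10.0, 15.0), "expected": 12.0},
    {"name": "sensor_2_is_middle", "inputs": (10.0, 12.0, 15.0), "expected": 12.0},
    {"name": "sensor_3_is_middle", "inputs": (10.0, 15.0, 12.0), "expected": 12.0},
]


def run_sequence(component, steps, tol=1e-9):
    """Run every step and record pass (True) or fail (False) per step."""
    results = {}
    for step in steps:
        actual = component(*step["inputs"])
        results[step["name"]] = abs(actual - step["expected"]) <= tol
    return results


results = run_sequence(mid_value, STEPS)
```

Because the steps are plain data, the same sequence can be pointed at a different implementation of the component, which is the property the article relies on when it reuses the simulation tests on the compiled code.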

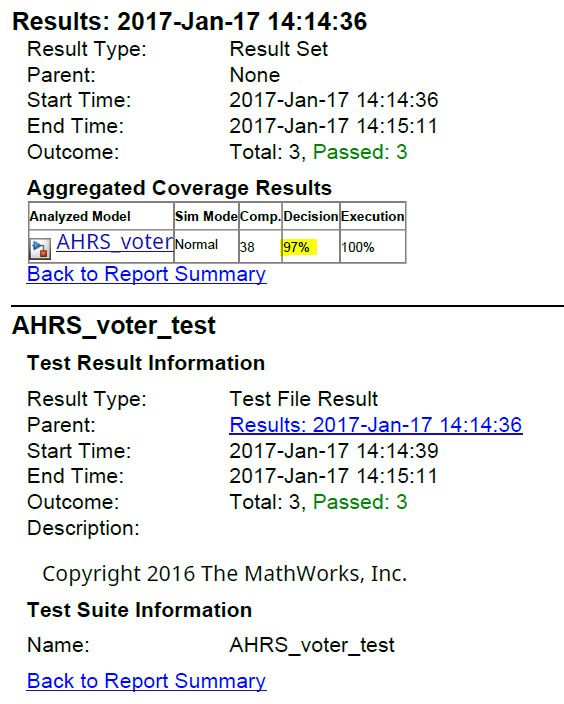

The other test cases in the Test Manager have additional test harnesses and Test Sequence blocks for their respective requirements. We run the simulations from the Test Manager; Simulink Test automatically produces a report of the results. During simulation, model coverage is measured by Simulink Coverage™ to identify how thoroughly the model has been covered during simulation. The summary section of the generated report for the three test cases, including a summary of the model coverage assessment, is shown in Figure 5.

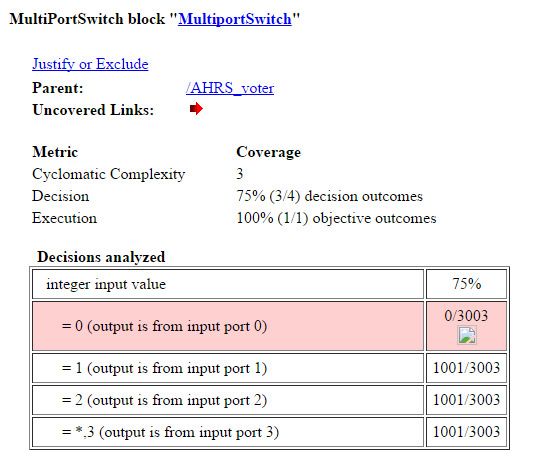

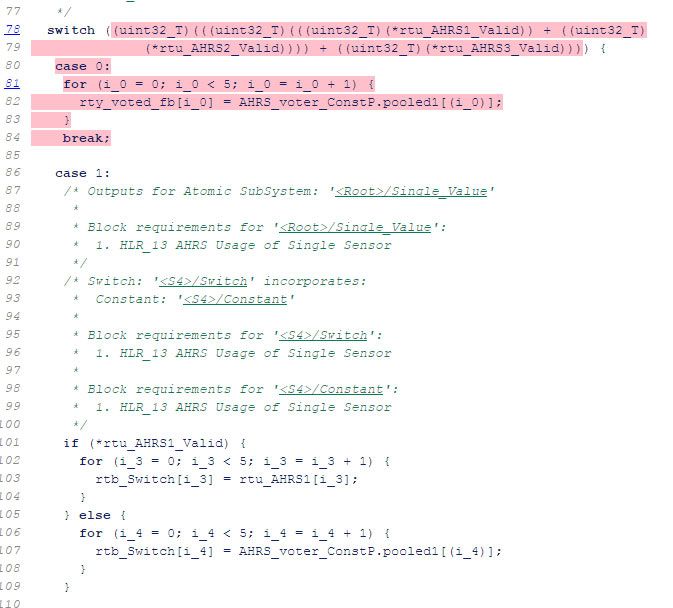

The report shows that all three test cases passed, but model coverage is not quite complete. While all the blocks in the model were executed, only 97% of decisions in the model were taken. The detailed model coverage report shows that the first missing decision is for the Multiport Switch block; the input condition of 0 was never tested (Figure 6).

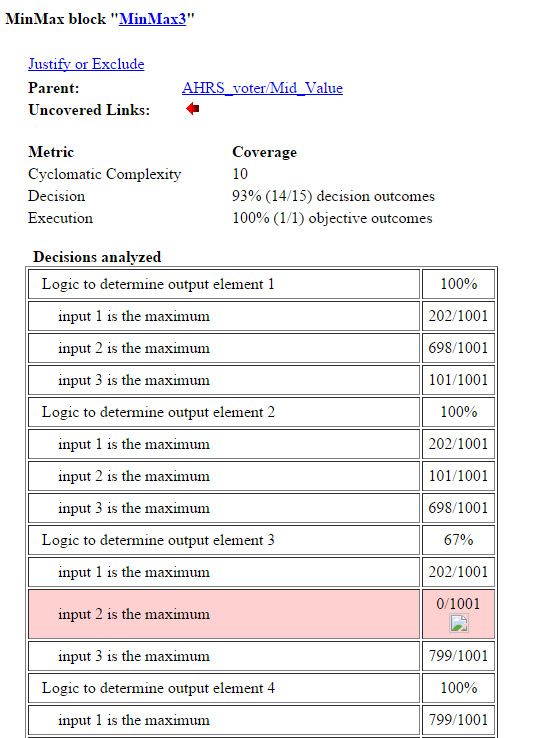

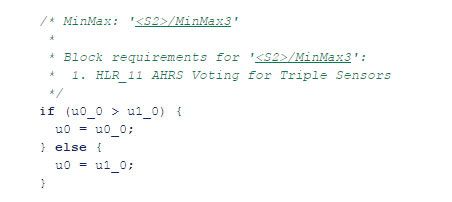

A second missing decision exists for a MinMax block in the mid value voting subsystem (Figure 7).

These missing decisions need to be addressed, because they will result in missing code coverage when we test the software. Safety standards such as DO-178C and ISO 26262 require full code coverage during testing.
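To make the notion of a missing decision outcome concrete, the following sketch records which control values reach a four-way switch. It is a simplified stand-in for what a coverage tool measures, not how Simulink Coverage is implemented; the function `multiport_switch`, the branch labels, and the assumption that the switch is driven by the number of valid sensors are all hypothetical.

```python
POSSIBLE_OUTCOMES = {0, 1, 2, 3}  # assumed control values: number of valid sensors


def multiport_switch(control, branches, taken):
    """Select a branch and record which control value was exercised."""
    taken.add(control)
    return branches[control]


branches = ["none_valid", "single", "average", "middle"]
taken = set()

# The three requirements-based tests drive the switch with 3, 2, and 1.
for control in (3, 2, 1):
    multiport_switch(control, branches, taken)

missing = POSSIBLE_OUTCOMES - taken
decision_coverage = len(taken) / len(POSSIBLE_OUTCOMES)
```

In this sketch `missing` contains only the control value 0, which corresponds to the untested Multiport Switch input described above. The percentage computed here applies to this single block and is not the model-wide figure from the report.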

Supplementing Simulation Cases

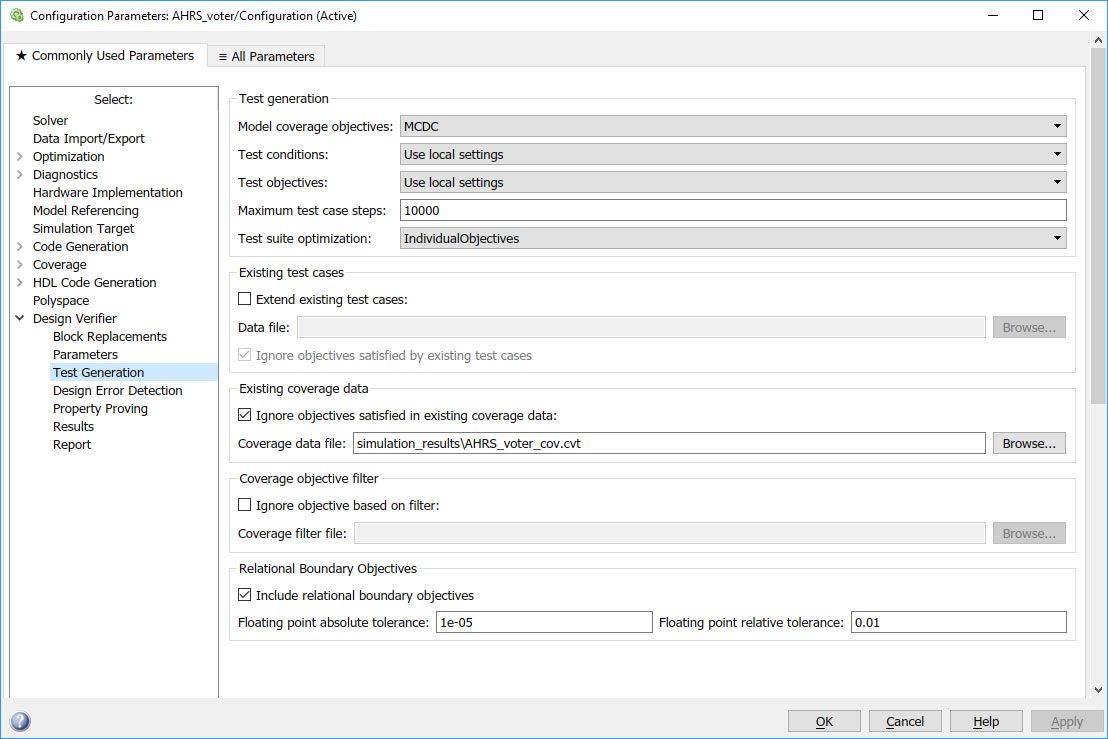

We can automatically generate test cases for the missing coverage by importing the coverage data into Simulink Design Verifier™ and having it ignore the model coverage objectives satisfied by the previous simulations (Figure 8).
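The principle of generating tests only for objectives that earlier runs did not satisfy can be sketched with a brute-force search. This is a deliberately naive substitute for the analysis that Simulink Design Verifier performs, and the objective names and predicates below are hypothetical simplifications of the two missing model objectives.

```python
from itertools import product

# Each objective is a predicate over (validity flags, sensor values).
OBJECTIVES = {
    "switch_input_0": lambda valid, vals: sum(valid) == 0,
    "switch_input_3": lambda valid, vals: sum(valid) == 3,
    "max_is_element_3": lambda valid, vals: sum(valid) == 3
    and vals[2] > max(vals[0], vals[1]),
}


def generate_tests(objectives, already_covered):
    """Search a small input grid for one test per uncovered objective."""
    remaining = {k: p for k, p in objectives.items() if k not in already_covered}
    tests = {}
    grid = product(product((0, 1), repeat=3), product((-1.0, 0.0, 1.0), repeat=3))
    for valid, vals in grid:
        for name, predicate in remaining.items():
            if name not in tests and predicate(valid, vals):
                tests[name] = (valid, vals)
        if len(tests) == len(remaining):
            break
    return tests


# Objectives already satisfied by the requirements-based simulations are skipped.
generated = generate_tests(OBJECTIVES, already_covered={"switch_input_3"})
```

Passing the set of already covered objectives keeps the generated suite small: only the two gaps receive new test vectors.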

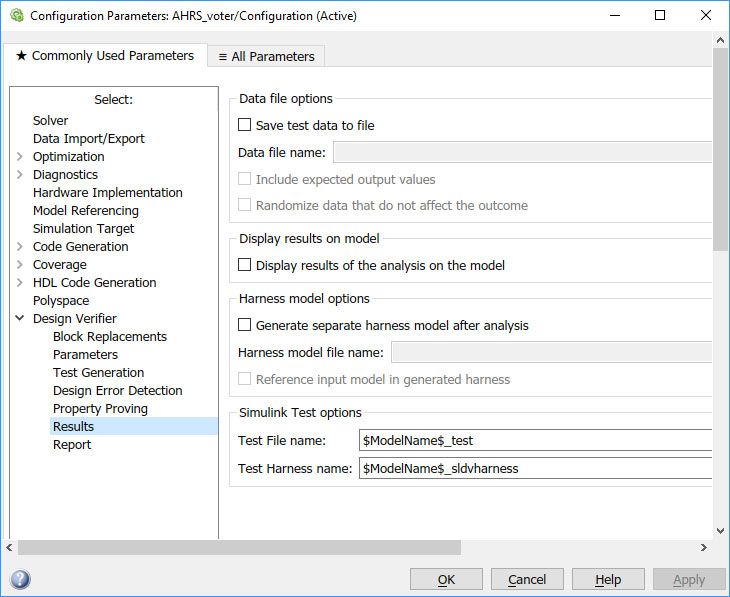

We set up Simulink Design Verifier to export the generated test cases into a Simulink Test file with a test harness, using the settings shown in Figure 9.

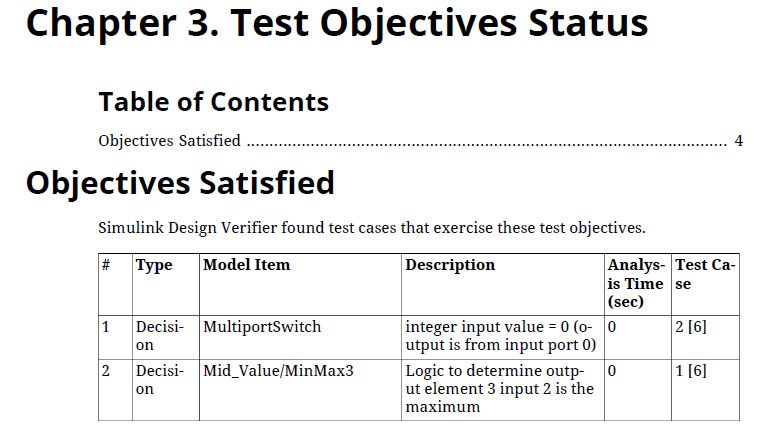

Once we execute the test cases and export them, a test generation report is produced that describes the generated test cases and the model objectives covered. Figure 10 shows a section from the report indicating that the generated tests cover two objectives: the Multiport Switch input of 0, and the MinMax case in which element 3 of input 2 is the maximum.

We should now have all the test cases needed to fully test the software.

Testing the Software and Measuring Code Coverage

We generate code from the AHRS_voter model using Embedded Coder® and compile it into an executable using the target compiler. We are now ready to test the compiled software and measure the code coverage. We do this in two steps: first we repeat the requirements-based simulation cases, and then we run the test cases generated automatically by Simulink Design Verifier, in both cases on the generated and compiled software. This approach allows us to detect any errors introduced by the compiler or the target hardware.

We set up a software test environment using the processor-in-the-loop (PIL) capability provided with Embedded Coder. An API in Embedded Coder allows Simulink running on a host computer to download the software to an external hardware development board and communicate with that software to execute it. The API can be set up to communicate directly with the board or to communicate through an integrated development environment (IDE) that connects to the board. It is a best practice to use the same CPU part number on the board that is used in the final target hardware and to use the same compiler and optimization settings as on the final target hardware.

The Embedded Coder API provides interface code on the host computer that allows Simulink to send the input data for a model reference block down to the board running the software for that block, and then read back the software's output at the block output for each time step. In effect, Simulink is emulating the call to the code function. This feature can be used for a single model reference block or for a hierarchy of model reference blocks. The code running in PIL can be instrumented for code coverage using Simulink Coverage or a third-party tool such as LDRA or VectorCAST®. Code profiling can also be done to measure execution time frame by frame.

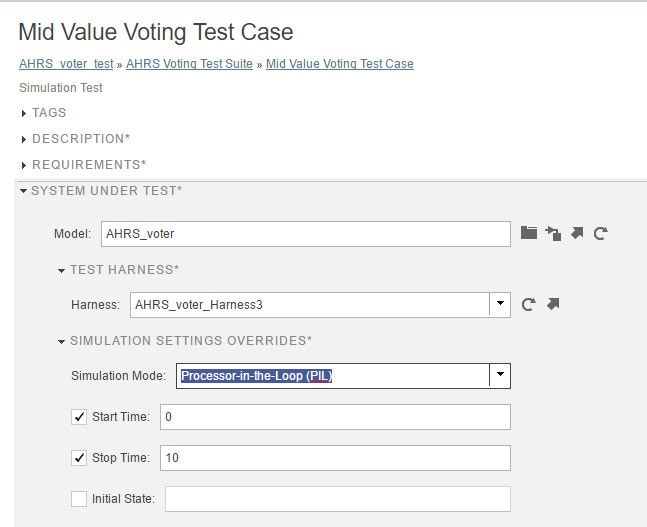

Now we can rerun the simulation tests by simply switching the mode of the model under test within the Test Manager to PIL (Figure 11).

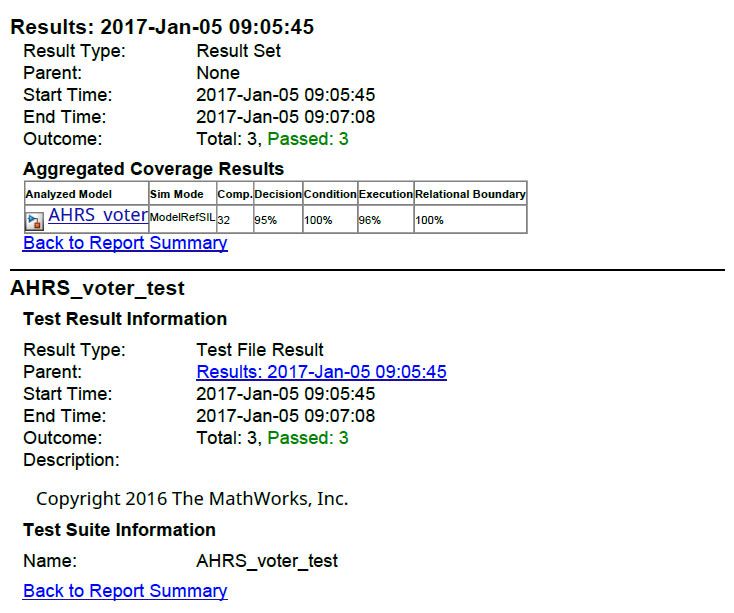

When the tests are run on the software, we get a test results report very much like the simulation results report that we generated previously. Figure 12 shows the test results for the software.

While the three software test cases pass, just like the simulation cases, the coverage results are slightly different for the software. Decision and execution coverage are lower for the software than for the model, and the code coverage report includes condition and relational boundary coverage, which were not reported for the model. These results are not due to different functionality, but to differences between the code and the model semantics.

The code coverage report clarifies some of these similarities and differences. The software implements the Multiport Switch in the model with a switch-case statement, and we can see that case 0, which corresponds to a switch input of 0, is not tested in the code. This is expected because the model simulation did not cover the switch control input of 0. However, case 0 also contains a line of code that is never executed, resulting in less than 100% statement, or execution, coverage. This missing coverage in the code is highlighted in the coverage report shown in Figure 13.

Another difference involves the MinMax3 block. This block was missing coverage for element 3 in the model, but there is no indication that coverage is missing in the code. It turns out that the code for this block is fully covered because the code implements the vector operation inside a for loop (Figure 14).

In this case, the criteria for test cases to cover the model are more stringent than those to cover the code.
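The reason a loop can be fully covered while an element-wise model objective is still missing can be shown with a small sketch. It is not the generated code from Figure 14; the function `vector_max` and the two bookkeeping sets are hypothetical and exist only to contrast the two coverage views.

```python
def vector_max(values, code_outcomes, model_outcomes):
    """Maximum of a vector, implemented with a single comparison in a loop."""
    best = values[0]
    best_index = 0
    for i in range(1, len(values)):
        larger = values[i] > best
        code_outcomes.add(larger)        # code view: one decision, True or False
        if larger:
            best = values[i]
            best_index = i
    model_outcomes.add(best_index + 1)   # model view: which element (1-based) won
    return best


code_outcomes, model_outcomes = set(), set()
vector_max([1.0, 2.0, 0.0], code_outcomes, model_outcomes)  # element 2 is the max
vector_max([5.0, 1.0, 2.0], code_outcomes, model_outcomes)  # element 1 is the max

code_fully_covered = code_outcomes == {True, False}
model_missing = {1, 2, 3} - model_outcomes
```

Two test vectors are enough to take the loop's comparison both ways, so the code view reports full decision coverage, yet no vector made element 3 the maximum, so the element-wise view still reports a gap.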

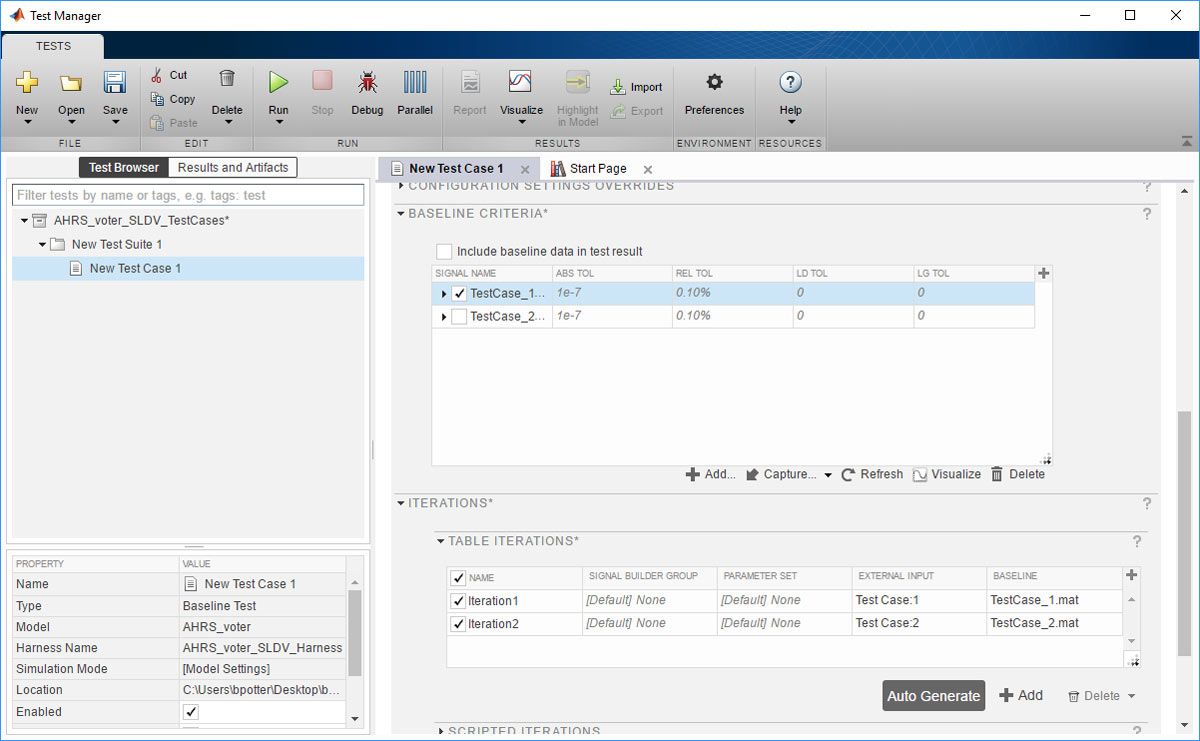

To complete the software testing, we run the Simulink Design Verifier generated test cases and measure coverage. Simulink Design Verifier has generated two test cases, along with expected results, and automatically exported them to Simulink Test so that they can be run using the PIL mode. Figure 15 shows the test iterations in the Test Manager.

We have selected an absolute tolerance of 1e-7 and a relative tolerance of 0.1% for comparing the Simulink model output with the software output. These values are selectable by the user.
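A back-to-back comparison using these two tolerances can be sketched as follows. The rule for combining them, in which a sample passes when the difference is within the larger of the absolute tolerance and the relative tolerance scaled by the baseline, is an assumption of this sketch; the function names and sample values are hypothetical.

```python
def within_tolerance(baseline, actual, abs_tol=1e-7, rel_tol=1e-3):
    """Return True if actual matches baseline within the combined tolerance.

    rel_tol=1e-3 corresponds to the 0.1% relative tolerance in the text.
    """
    return abs(actual - baseline) <= max(abs_tol, rel_tol * abs(baseline))


def failing_steps(model_output, software_output):
    """Indices of time steps where the software output is out of tolerance."""
    return [
        i
        for i, (m, s) in enumerate(zip(model_output, software_output))
        if not within_tolerance(m, s)
    ]


model_output = [0.0, 10.0, 100.0]
software_output = [5e-8, 10.005, 100.2]
failures = failing_steps(model_output, software_output)
```

Near zero the absolute tolerance dominates, while for larger signal values the relative tolerance sets the limit, which is why both are usually specified together.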

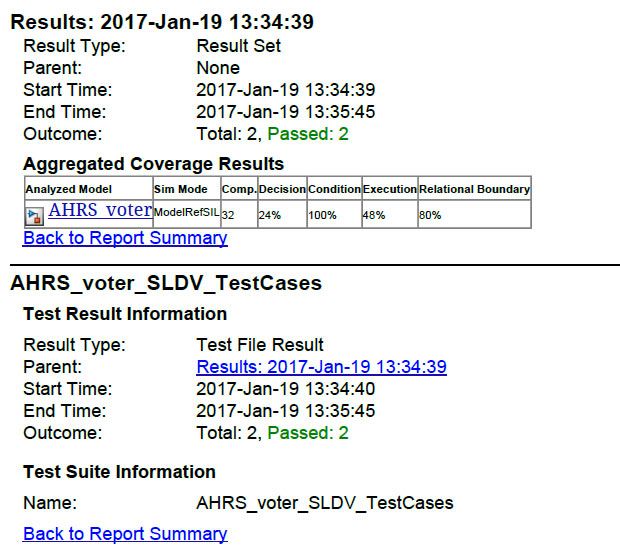

Running these test cases results in a test report and a code coverage report that contains the code coverage summary (Figure 16).

The two test cases pass, as expected. The code coverage results for these two test cases alone are relatively low because they were generated only to cover the Multiport Switch input of 0 and the MinMax case in which element 3 of input 2 is the maximum. To get the code coverage for the entire test suite (these two cases plus the three cases run previously), we must merge the coverage data from the two test runs.

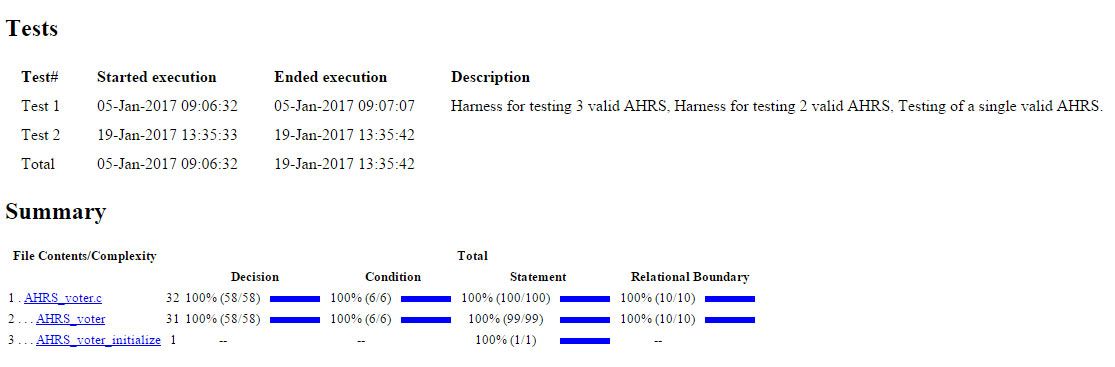

When the two sets of coverage data are merged, we get a report indicating that full structural coverage of the software has been achieved (Figure 17).

Test 1 comprises the requirements-based tests previously run on the code. Test 2 comprises the Simulink Design Verifier tests run on the code. The summary results indicate the total coverage achieved by running both sets of tests.
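Conceptually, merging coverage amounts to taking the union of the objectives satisfied by each run. The sketch below illustrates that idea only; the objective names are hypothetical and it does not reflect the coverage data format used by Simulink Coverage.

```python
ALL_OBJECTIVES = {"case_0", "case_1", "case_2", "case_3", "loop_true", "loop_false"}

# Hypothetical per-run results: objectives each test run satisfied.
requirements_based_run = {"case_1", "case_2", "case_3", "loop_true", "loop_false"}
generated_tests_run = {"case_0", "case_3", "loop_true"}


def merge_coverage(*runs):
    """Union the covered objectives from any number of test runs."""
    merged = set()
    for run in runs:
        merged |= run
    return merged


merged = merge_coverage(requirements_based_run, generated_tests_run)
uncovered = ALL_OBJECTIVES - merged
percent_covered = 100.0 * len(merged) / len(ALL_OBJECTIVES)
```

Neither run reaches full coverage on its own in this example, but the merged set leaves nothing uncovered, which is the situation the merged report describes.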

Summary

We have seen how to go from requirements to design to code using a Simulink model. During the process, many of the verification activities were automated to reduce the manual effort required. The result is a software system that has been fully verified against the requirements and has full code coverage.

This example covered only a small portion of a real system. The same process can be extended to cover a complete system such as the complete helicopter flight control system or an autopilot system for a fixed-wing aircraft.

Published 2017 - 93162v00