Continuous Integration for Verification of Simulink Models

By David Boissy, Paul Urban, Krishna Balasubramanian, Pablo Romero Cumbreras, Colin Branch, and Jemima Pulipati, bat365

This is the first article in a two-part series. Part 1 looks at leveraging GitLab® for version control and Jenkins® for continuous integration (CI). Part 2, Continuous Integration for Verification of Simulink Models Using GitLab, looks at using GitLab for both version control and CI.

Continuous integration (CI) is gaining in popularity and becoming an integral part of Model-Based Design. But what is CI? What are its benefits, and what problems does it attempt to solve? How does Simulink® fit into the CI ecosystem? And how can you best leverage CI for your projects?

If you are familiar with Model-Based Design but new to CI, you may be asking yourself these questions. In this technical article, we explore a common CI workflow and apply it to Model-Based Design. Then, we walk through an example of that workflow using Jenkins, GitLab, and Simulink Test™.

The project used in this example is available for download.

What Is CI?

CI is an agile methodology best practice in which developers regularly submit and merge their source code changes into a central repository. These “change sets” are then automatically built, qualified, and released. Figure 1 illustrates this basic CI workflow together with the development workflow.

Figure 1. CI workflow.

In the development part of the workflow, models and tests are developed, verified, merged, reviewed, and submitted to a version control system on the developer desktop. The version control system then triggers the automated CI portion of the workflow. The key parts of the CI workflow are:

Build: Source code and models become object files and executables.

Test: Testing is performed as a quality gate.

Package: Executables, documentation, artifacts, and other deliverables are bundled for delivery to end users.

Deploy: Packages are deployed to the production environment.

Together, these four steps are known as the CI “pipeline.” The pipeline is typically automated, and it can take anywhere from minutes to days to complete, depending on the system. It is worth noting that throughout these steps, numerous artifacts are created, such as bills of materials, test results, and reports.
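To make the quality-gate idea concrete, here is a minimal MATLAB sketch that runs the four stages in order and skips everything after the first failure. The stage actions are hypothetical placeholders (anonymous functions that simply return true or false), and a CI system such as Jenkins provides this gating for you; the sketch only illustrates the control flow.

```matlab
% Stage names follow the four pipeline steps described above.
stageNames = ["Build", "Test", "Package", "Deploy"];

% HYPOTHETICAL stage actions: each returns true on success.
% Here the Test stage is made to fail on purpose.
stageActions = {@() true, @() false, @() true, @() true};

status = strings(1, numel(stageNames));
pipelinePassed = true;

for k = 1:numel(stageNames)
    if ~pipelinePassed
        % An earlier stage failed, so later stages never run.
        status(k) = "skipped";
        continue
    end
    if stageActions{k}()
        status(k) = "passed";
    else
        status(k) = "failed";
        pipelinePassed = false;
    end
end

disp(table(stageNames', status', 'VariableNames', {'Stage', 'Status'}));
```

With the placeholder actions shown, Build passes, Test fails, and Package and Deploy are skipped, which is the behavior expected of a quality gate.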

CI workflows are often paired with developer workflows related to version control systems. In these workflows, developers often keep their changes in local repositories and use a local CI pipeline to qualify their changes prior to deployment.

What Are the Benefits of CI?

Teams that have implemented CI typically report the following benefits:

- Repeatability. The CI pipeline provides a consistent and repeatable automated process for building, testing, packaging, and deployment. Repeatable automation allows developers to focus on necessary work and save time on a project. It is also an important aspect of risk reduction and is often a requirement for certification.

- Quality assurance. Manual testing is effective, but it is often based on days-old snapshots and lacks repeatability. With CI, changes are always tested against the most recent code base.

- Reduced development time. Repeatable processes with built-in quality assurance lead to faster delivery of high-quality products. Automated deployment means that your code is always production ready.

- Improved collaboration. With CI, developers have a defined process for managing change sets and merging their code into the production line. Consistent processes make managing large teams possible and reduce the cost of ramping up new developers.

- Audit-ready code. The CI workflow provides an extensive audit trail. For every change making its way through the CI pipeline, it’s possible to identify who made the change, who reviewed it, and the nature of the change, as well as dependencies, tests and their results, and any number of related reports and artifacts generated along the way.

How Does Model-Based Design Fit into CI?

By design, the CI workflow and tools are language- and domain-neutral. This means that the challenge is to teach CI tools, systems, and processes to speak Model-Based Design—in other words, to make Simulink® and related tools the lingua franca of the CI workflow.

This can be done by integrating three key components of Model-Based Design into the CI workflow: verification, code generation, and testing (Figure 2). Model-Based Design emphasizes early verification, which maps to the CI pipeline with a Verify phase before the Build phase. Code generation takes place in the Build phase. Dynamic testing through simulation and static analysis of generated code can be done in the Test phase.

Figure 2. Model-Based Design mapped to CI pipeline.

Here is an overview of how we teach the CI workflow to speak Model-Based Design:

Develop. MATLAB®, Simulink, coders, and toolboxes are used for development activities. MATLAB Projects are used to organize work, collaborate, and interface with version control systems.

Test. Simulink Check™ is used to perform model quality checks before simulation and code generation. Simulink Test is used to develop, manage, and execute simulation-based tests. Simulink Coverage™ is used to measure coverage and assess test effectiveness. The quality checks, test results, and coverage metrics can then be used as a quality gate for developers to qualify their work.

Merge. The Compare Files and Folders feature of MATLAB is used to compare and merge MATLAB files. The Model Comparison Tool is used to compare and merge Simulink models.

Review. Review is the final step in the quality process before changes are submitted to the version control system. Changes to MATLAB scripts and Simulink models are reviewed here. Test results from prequalification are also reviewed as a final quality gate prior to submission.

Submit. MATLAB projects provide an interface to version control systems.

Verify. Simulink Check, the same tool used for local verification, is used for automated verification within the CI system.

Build. MATLAB Coder™, Simulink Coder™, and Embedded Coder® are used to generate code for software-in-the-loop (SIL) testing.

Test. Simulink Test, the same tool used for local testing, is used for automated testing within the CI system.

Package and deploy. Packaging is where executables, documentation, artifacts, and other deliverables are bundled up for delivery to end users. Deployment is the release of the packaged software. In workflows for Model-Based Design, these phases vary widely among organizations and groups, and often involve bundling different builds and certification artifacts into a product ready for delivery to other teams.

Modern development tools and practices enable developers to create more robust systems and test functionality early and often. When a CI system is integrated into the workflow, unit-level testing and system-level testing are automated. This means that the developer can focus on developing new features, not on verifying that features have been integrated correctly.

The following case study describes a workflow that incorporates CI and Model-Based Design.

Case Study: A Simulink Model Verified, Built, and Tested Within a CI System

In this example we use Model-Based Design with CI to perform requirements-based testing on an automotive lane-following system (Figure 3).

The pipeline we’ll be using (Figure 4) is executed with each Jenkins build.

Figure 4. Pipeline for a lane-following example.

The phases in the pipeline are as follows:

- Verify standards compliance: A MATLAB Unit script runs a simple Model Advisor check. The assessment criteria ensure that the model does not have unconnected lines.

- Build model: A MATLAB Unit test file builds production SIL code for our model. The assessment criterion passes if the build succeeds without warnings.

- Execute test cases: A test suite in Simulink Test uses several driving scenarios to test the lane-following controller. Three assessment criteria are used to verify satisfactory operation of the controller:

  - Collision avoidance: The ego car does not collide with the lead car at any point during the driving scenario.

  - Safe distance maintenance: The time gap between the ego car and the lead car is above 1.5 seconds. The time gap between the two cars is defined as the ratio of the calculated headway and the ego car velocity.

  - Lane following: The lateral deviation from the centerline of the lane is within 0.2 m.

- Package artifacts: Each of the previous stages produces artifacts, including a Model Advisor report, a generated executable, and a set of test results that can be archived for future use or reference.
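The three test criteria above reduce to simple checks on logged signals. The sketch below evaluates them on a handful of made-up samples; the variable names and data are assumptions for illustration and are not taken from the example project.

```matlab
% Synthetic samples (made-up values, for illustration only).
headway          = [30 24 18 12 20];              % distance to lead car, m
egoSpeed         = [20 20 18 16 16];              % ego car velocity, m/s
lateralDeviation = [0.05 -0.10 0.15 -0.18 0.08];  % offset from lane center, m
collision        = false(size(headway));          % collision flag per sample

% Time gap is the ratio of headway to ego car velocity (in seconds).
% Note: this division is undefined when the ego car is stationary.
timeGap = headway ./ egoSpeed;

collisionFree   = ~any(collision);
safeDistanceOk  = all(timeGap > 1.5);
laneFollowingOk = all(abs(lateralDeviation) <= 0.2);

fprintf('Minimum time gap: %.2f s\n', min(timeGap));
fprintf('Collision avoidance met: %d\n', collisionFree);
fprintf('Safe distance met:       %d\n', safeDistanceOk);
fprintf('Lane following met:      %d\n', laneFollowingOk);
```

With these samples the minimum time gap is 0.75 s, so the safe distance criterion is not met even though the other two are. The assessments in the example model are less strict than these instantaneous checks because they also allow brief excursions.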

Workflow Steps

The workflow consists of the following steps (Figure 5):

- Trigger a build in Jenkins and observe that the Verify and Build stages pass.

- Detect a test case failure in Jenkins.

- Reproduce the issue on our desktop MATLAB.

- Fix the issue in the model by relaxing the assessment criteria.

- Test locally to ensure the test case passes.

- Merge and review the changes on the testing branch.

- Commit the change to Git and trigger a build in Jenkins.

- Verify, build, and test in Jenkins.

Figure 5. Example workflow.

Our first failed pass through the CI loop is illustrated at the top left. It shows the CI test failure, local reproduction, criteria relaxing, and successful completion of the CI workflow.

Workflow Details

- We begin by triggering a build in Jenkins by selecting Build Now. The Simulink Check checks and the code generation step both pass.

- Next, we detect a test case failure in the second verify phase. The test case LFACC_Curve_CutInOut_TooClose in test suite LaneFollowingTestScenarios fails the assessment criteria.

- To understand the failure better, we reproduce the failure locally using Simulink Test. We open test file LaneFollowingTestScenarios.mldatx and run test case LFACC_Curve_CutInOut_TooClose. Note that it fails the Safe Distance assessment criteria. More flexibility in establishing the time gap between the lead car and ego car is required.

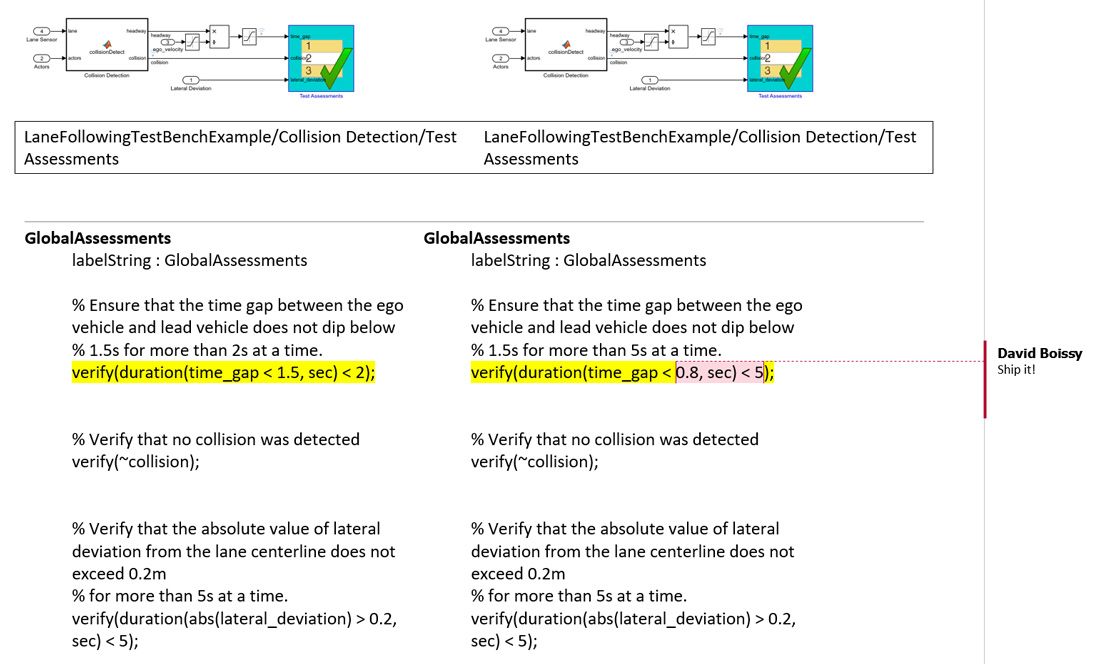

- With an understanding of the problem, we now fix the issue. We open the LaneFollowingTestBenchExample.slx model and navigate to the Collision Detection/Test Assessments Test Sequence block. The first assessment asserts that the time gap between the ego and the lead car should not dip below 1.5 seconds for more than 2 seconds at a time.

    GlobalAssessments
    % Ensure that the time gap between the ego vehicle and lead vehicle does not dip below
    % 1.5s for more than 2s at a time.
    verify(duration(time_gap < 1.5, sec) < 2);
    % Verify that no collision was detected
    verify(~collision);
    % Verify that the absolute value of lateral deviation from the lane centerline does not exceed 0.2m
    % for more than 5s at a time.
    verify(duration(abs(lateral_deviation) > 0.2, sec) < 5);

This assessment is too restrictive for the aggressive driving maneuver being tested. For the purposes of this example, we relax the assessment criteria to ensure that the time gap does not dip below 0.8 seconds for more than 5 seconds at a time.

    GlobalAssessments
    % Ensure that the time gap between the ego vehicle and lead vehicle does not dip below
    % 0.8s for more than 5s at a time.
    verify(duration(time_gap < 0.8, sec) < 5);
    % Verify that no collision was detected
    verify(~collision);
    % Verify that the absolute value of lateral deviation from the lane centerline does not exceed 0.2m
    % for more than 5s at a time.
    verify(duration(abs(lateral_deviation) > 0.2, sec) < 5);
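The difference between the two assessments can be explored offline with a simplified approximation of the duration check. The sketch below is not the Simulink Test implementation: the helper longestTrueRun is a hypothetical function written for this illustration, and the time-gap trace is made-up data. Save it as a script file (local functions in scripts require R2016b or later).

```matlab
% Synthetic time-gap trace (made-up data): 2.0 s nominal, dipping to
% 1.0 s between t = 3 s and t = 6 s, as might happen during a cut-in.
t = (0:100) / 10;                     % sample times, s (0.1 s steps)
time_gap = 2.0 * ones(size(t));
time_gap(t >= 3 & t <= 6) = 1.0;

% Original criterion: the gap must not stay below 1.5 s for 2 s or longer.
originalPasses = longestTrueRun(t, time_gap < 1.5) < 2;

% Relaxed criterion: the gap must not stay below 0.8 s for 5 s or longer.
relaxedPasses = longestTrueRun(t, time_gap < 0.8) < 5;

fprintf('Original criterion passes: %d\n', originalPasses);
fprintf('Relaxed criterion passes:  %d\n', relaxedPasses);

function longest = longestTrueRun(t, cond)
% Longest continuous time span (in seconds) over which cond stays true,
% measured from the first true sample of each run to its last.
longest = 0;
runStart = NaN;                       % NaN means "not currently in a run"
for k = 1:numel(t)
    if cond(k)
        if isnan(runStart)
            runStart = t(k);          % a new run begins at this sample
        end
        longest = max(longest, t(k) - runStart);
    else
        runStart = NaN;               % condition is false, so the run ends
    end
end
end
```

On this trace the gap stays below 1.5 s for 3 s, so the original criterion fails, while the gap never drops below 0.8 s, so the relaxed criterion passes.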

- The issue appears fixed in our simulation. To confirm, we test locally, saving the model and rerunning the test in the test manager. Notice that it passes with the new assessment criteria.

- We have fixed the issue and verified locally. We now use the Model Comparison tool to review the changes before committing them to version control.

We could also use the Publish feature of the Model Comparison tool to review the code.

- With the bug fixed, we push these changes to GitLab with MATLAB projects, adding a commit message to note the change to the assessment criteria.

We then note the latest commit in GitLab.

GitLab automatically triggers a build in Jenkins. The Jenkins Project dashboard shows the build status and progress.

- The Jenkins build runs. We see that the verify, build, and test pipeline phases now pass.

We can now start a merge request to merge the changes in the test branch into the main branch. In GitLab, under Repository we select Branches then click Merge request next to the latest commit on the test branch.

We complete the form and submit the merge request.

As the owners of the branch, we can accept the merge request by clicking the Merge button. All changes are now captured on the main branch.
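The local reproduction and retest steps in this walkthrough use the Test Manager interactively, but they can also be scripted. The following is a minimal sketch, assuming Simulink Test is installed and the example project is already open so that the test file is on the MATLAB path. It runs every test case in the file rather than only the failing one.

```matlab
% Load the test file named in the walkthrough into the Test Manager.
testFile = sltest.testmanager.load('LaneFollowingTestScenarios.mldatx');

% Run all test cases in the file and collect the result set.
resultSet = run(testFile);

% Summarize the outcome at the command line.
fprintf('Passed: %d, Failed: %d, Total: %d\n', ...
    resultSet.NumPassed, resultSet.NumFailed, resultSet.NumTotal);
```

Running the same script before and after editing the assessment gives a quick check that the change has the intended effect before anything is pushed.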

Using the Example: Tools, Resources, and Requirements

The following sections outline resources to help you get started, the tools you will require, and how they should be configured.

Configuring Systems

Jenkins is leveraged as our CI system and GitLab as our version control system. MATLAB, Jenkins, and GitLab must be configured to work together. The following tutorials will help with setup.

Configure GitLab to Trigger Jenkins

The tutorials are specific to GitLab and Jenkins, but the concepts can apply to other version control and CI systems.

Tools Required

The following tools are required for this example:

- Jenkins installation version 2.7.3 or later. Jenkins is used for continuous integration.

- MATLAB Plugin for Jenkins version 1.0.3 or later. MATLAB, Simulink, and Simulink Test all leverage this plugin to communicate with Jenkins. Learn more on GitHub.

- Additional plugins required:

- GitLab account. GitLab is used for source control and is available as a cloud service. MATLAB Projects include a Git interface for communication with GitLab.

License Considerations for CI

If you plan to perform CI on many hosts or on the cloud, contact continuous-integration@bat365 for help. Note: Transformational products such as bat365® coder and compiler products may require Client Access Licenses (CALs).

Appendix: Configuring MATLAB, GitLab, and Jenkins

Step 1. Configure MATLAB project to use source control

The first step in our example is to configure our project to use source control with GitLab.

- Create a new directory named MBDExampleWithGitAndJenkins, load the example into it, and open the MATLAB Project MBDExampleWithGitAndJenkins.prj.

- In GitLab, create a new project that will be the remote repository. Name it MBDExampleWithGitAndJenkins and record the URL where it is hosted.

- In MATLAB, convert the project to use source control. On the Project tab, click Use Source Control.

Click Add Project to Source Control.

- Click Convert.

- Click Open Project when done.

The project is now under local Git source control.

Step 2. Commit changes and push local repository to GitLab

- On the Project tab, click Remote.

- Specify the URL of the remote origin in GitLab.

Click Validate to ensure the connection to the remote repository is successful and click OK. The project is now configured to push and pull changes with GitLab.

- Click Commit to perform an initial commit.

- Click Push to push all changes from the local repository to the remote GitLab repository.

- Refresh the GitLab dashboard and observe the contents of the MATLAB project.

Step 3. Create testing branch

In this step we create a testing branch for testing and verifying changes before merging with the main branch.

- Click Branches.

- Expand the Branch and Tag Creation section, name the branch “Test,” and click Create.

- Observe Test in the branch browser. From the Test branch click Switch then Close.

- In MATLAB select Push to push these changes to GitLab and observe the Test branch in GitLab.
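For readers who prefer the command line, Steps 2 and 3 correspond roughly to the Git commands in the sketch below, issued from MATLAB in the project root folder. The remote URL is a hypothetical placeholder and the commit message is an assumption. The script only prints the commands unless dryRun is set to false, and the commit command fails if there is nothing new to commit.

```matlab
% HYPOTHETICAL remote URL: replace with the URL recorded in Step 1.
remoteUrl = "https://gitlab.example.com/your-group/MBDExampleWithGitAndJenkins.git";
dryRun = true;   % set to false to actually run the commands

commands = [
    "git remote add origin " + remoteUrl
    "git add --all"
    "git commit -m ""Initial commit"""
    "git push --set-upstream origin HEAD"
    "git checkout -b Test"
    "git push --set-upstream origin Test"
    ];

for k = 1:numel(commands)
    if dryRun
        % Print the command instead of executing it.
        fprintf('%s\n', commands(k));
    else
        [status, output] = system(char(commands(k)));
        if status ~= 0
            error('Git command failed: %s\n%s', commands(k), output);
        end
    end
end
```

Keeping the dry-run default makes it easy to review the exact commands before anything is pushed to the remote repository.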

Step 4. Configure Jenkins to call MATLAB

- Install two required plugins:

- GitLab plugin — This plugin allows GitLab to trigger Jenkins builds and display their results in the GitLab UI.

- MATLAB plugin — This plugin integrates MATLAB with Jenkins and provides the Jenkins interface to call MATLAB and Simulink.

- Select New Item and create a new FreeStyle project named MBDExampleUsingGitAndJenkins.

- Under Source Code Management, enable Git, point Jenkins to our GitLab repository, and enter the Test branch to build. Note: Login or password and GitLab API token are required.

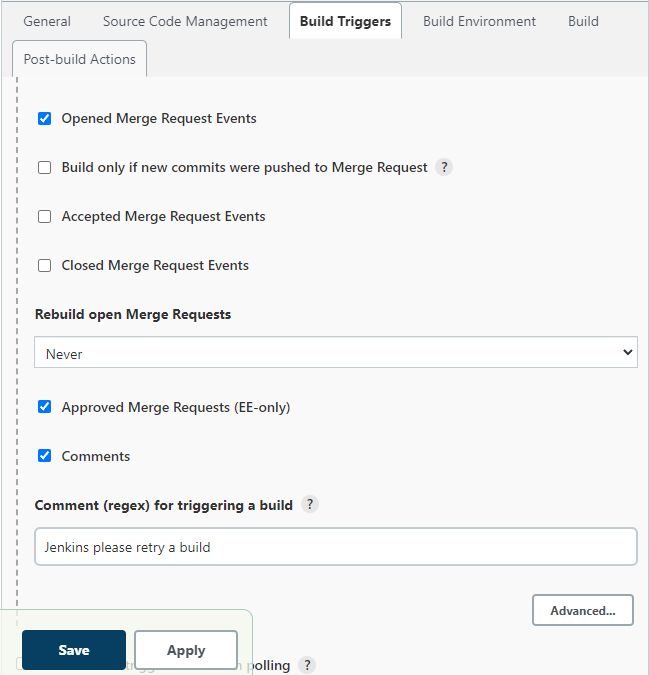

- Configure the build trigger to run a build when a push request is made to the Test branch in GitLab. In the Build Triggers section select Advanced > secret token. This token is used by GitLab to request a build and authenticate with Jenkins. Make a note of the secret token and the GitLab webhook.

- Configure the build environment. Select Use MATLAB version and enter the MATLAB root.

- Configure the build step.

Click Add build step and choose Run MATLAB Command. Enter the following command to open the project and run the Model Advisor checks:

openProject('SltestLaneFollowingExample.prj'); LaneFollowingExecModelAdvisor

Click Add build step and choose Run MATLAB Command again. Enter the command:

openProject('SltestLaneFollowingExample.prj'); LaneFollowingExecControllerBuild

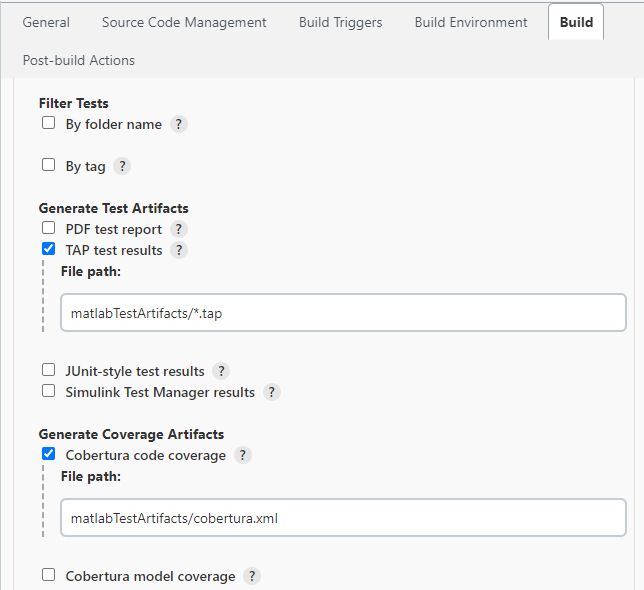
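The contents of the scripts called in these build steps are not reproduced in this article. As a rough idea of what a Model Advisor check wrapped in a MATLAB Unit test can look like, here is a minimal sketch. The file name is hypothetical, the model name is taken from the case study, and the check ID is an assumption that should be confirmed in the Model Advisor before use. It requires Simulink and Simulink Check.

```matlab
function tests = checkUnconnectedLinesTest
% Function-based MATLAB Unit test.
% HYPOTHETICAL file name: checkUnconnectedLinesTest.m
tests = functiontests(localfunctions);
end

function testNoUnconnectedLines(testCase)
model = 'LaneFollowingTestBenchExample';   % model named in the case study
load_system(model);
% Close the model without saving once the test finishes.
addTeardown(testCase, @close_system, model, 0);

% ASSUMPTION: check ID for the unconnected lines and ports check.
% Confirm the ID in the Model Advisor before relying on it.
checkIDs = {'mathworks.design.UnconnectedLinesPorts'};

% Run the selected check; the result is a cell array with one
% entry per system that was analyzed.
results = ModelAdvisor.run({model}, checkIDs);

verifyEqual(testCase, results{1}.numFail, 0, ...
    'A Model Advisor check failed.');
verifyEqual(testCase, results{1}.numWarn, 0, ...
    'A Model Advisor check raised a warning.');
end
```

A test like this can be run locally with runtests('checkUnconnectedLinesTest'), which gives developers the same verdict on the desktop that the CI system would report.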

Click Add build step and choose Run MATLAB Tests. Select TAP test results and Cobertura code coverage to complete the build configuration.

This action parses the TAP test results and makes them viewable when TAP Extended Test Results is selected. The output contains an overview of the executed test cases, the results summary, and logs from the MATLAB console.

The TAP plugin also collects the results of the latest test executions and displays a health diagram as shown below. You can access any previous build by clicking on the diagram.

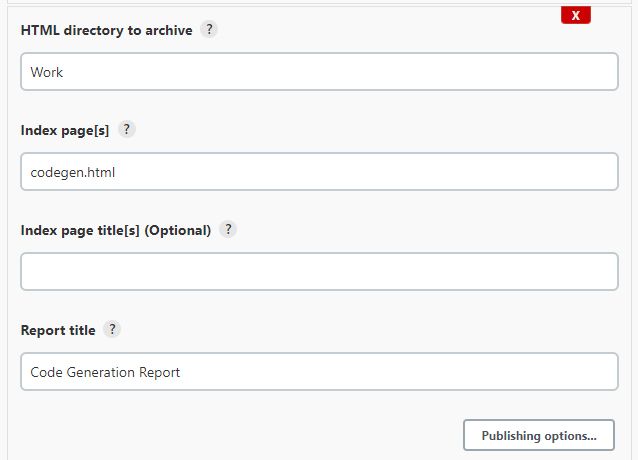
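The Run MATLAB Tests build step handles test discovery and TAP output for you. If you want to produce a TAP file from a plain MATLAB script instead, a minimal sketch using the MATLAB unit testing framework might look like the following. The output file name is a hypothetical choice, and coverage reporting is left out to keep the sketch short.

```matlab
import matlab.unittest.TestRunner
import matlab.unittest.plugins.TAPPlugin
import matlab.unittest.plugins.ToFile

artifactFolder = 'matlabTestArtifacts';              % folder for test artifacts
tapFile = fullfile(artifactFolder, 'results.tap');   % HYPOTHETICAL file name
if ~isfolder(artifactFolder)
    mkdir(artifactFolder);
end

% Discover tests in the current folder and its subfolders.
suite = testsuite(pwd, 'IncludeSubfolders', true);

% Run with command-line output and write TAP results to a file.
runner = TestRunner.withTextOutput;
runner.addPlugin(TAPPlugin.producingOriginalFormat(ToFile(tapFile)));
results = runner.run(suite);

% Raise an error on failure so that a batch-mode MATLAB session
% ends unsuccessfully and the CI build is marked as failed.
numFailed = nnz([results.Failed]);
if numFailed > 0
    error('%d test(s) failed.', numFailed);
end
```

The resulting TAP file can then be picked up by the same Jenkins TAP reporting described above.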

Step 6. Publishing HTML reports

Click Add post-build action > Publish HTML reports. Enter the relative root path where the HTML report will be published and the filename of the index page that is in the path.

Add as many entries as there are HTML reports to be published. In this scenario, there are two web reports: The Model Advisor Summary and the Code Generation Report. These are standard reports created with MATLAB built-in functions. You can add custom HTML reports.

You will find a report link corresponding to the last build for every HTML report on the main Jenkins job page. If you activate the checkbox “Always link to last build” under publishing options, the plugin will publish reports for the last build regardless of the build status. If this checkbox is not activated, the plugin will only link to the last “successful” build.

Step 7. Configuring GitLab to trigger a build in Jenkins

Configure GitLab to trigger an automatic build in Jenkins when a new push occurs on the master branch. To do this, navigate to Settings > Webhooks. Use the webhook URL and the secret token provided by Jenkins in the Build Trigger configuration and select Push events.

Note: Use a fully qualified domain name in the URL section in place of localhost so that GitLab can find the Jenkins installation.

In the Test pulldown, select Push Events to test the integration. GitLab will show the message “Hook executed successfully: HTTP 200” and Jenkins will start a build.

Step 8. Configuring Jenkins-to-GitLab authentication

To publish a Jenkins build status automatically on GitLab, you must configure the Jenkins-to-GitLab authentication.

- Create a personal access token on GitLab with API scope selected.

- Copy the token and create a GitLab connection under Jenkins Configure System.

Note: Connections can be reused over multiple Jenkins jobs and may be configured globally if the user has at least “maintainer” rights.

Step 9. Integrating Jenkins into the GitLab pipeline

To integrate Jenkins into the GitLab pipeline you must configure the GitLab connection in Jenkins and publish the job status to GitLab.

- Select the GitLab Connection in the General section of your Jenkins job.

- Add a post-build action to publish build status to GitLab.

Note: This action has no parameter and will use the existing GitLab connection to publish the build status on GitLab and create bidirectional traceability for every commit and merge request.

Step 10. Visualizing requirements-based testing metrics (R2020b)

Requirements-based testing metrics let you assess the status and quality of your requirements-based testing activities. The metrics results can be visualized using the Model Testing Dashboard.

- Create a file called collectModelTestingResults.m based on the function shown below. This function will initialize the metrics engine infrastructure and collect all available model metrics.

    function collectModelTestingResults()
        % metric capability added in R2020a
        if exist('metric')
            metricIDs = [...
                "ConditionCoverageBreakdown" "CoverageDataService"...
                "DecisionCoverageBreakdown" "ExecutionCoverageBreakdown"...
                "MCDCCoverageBreakdown" "OverallConditionCoverage"...
                "OverallDecisionCoverage" "OverallExecutionCoverage"...
                "OverallMCDCCoverage" "RequirementWithTestCase"...
                "RequirementWithTestCaseDistribution" "RequirementWithTestCasePercentage"...
                "RequirementsPerTestCase" "RequirementsPerTestCaseDistribution"...
                "TestCaseStatus" "TestCaseStatusDistribution"...
                "TestCaseStatusPercentage" "TestCaseTag"...
                "TestCaseTagDistribution" "TestCaseType"...
                "TestCaseTypeDistribution" "TestCaseWithRequirement"...
                "TestCaseWithRequirementDistribution" "TestCaseWithRequirementPercentage"...
                "TestCasesPerRequirement" "TestCasesPerRequirementDistribution"...
                ];
            % collect all metrics for initial reconcile
            E = metric.Engine();
            execute(E, metricIDs);
        end
    end

- Add this file to your project and to the path.

- Configure Jenkins to collect metric results by calling the new collectModelTestingResults function twice. The first call initializes the metrics integration with Simulink Test Manager. The second collects the metrics results using the exported Simulink Test Manager results.

  - Click Add build step and choose Run MATLAB Command again. Enter the command:

    openProject('SltestLaneFollowingExample.prj'); collectModelTestingResults

    Position this build step before the Run MATLAB Tests build step.

  - Click Add build step and choose Run MATLAB Command again. Enter the command again:

    openProject('SltestLaneFollowingExample.prj'); collectModelTestingResults

    Position this build step after the Run MATLAB Tests build step.


- Check Simulink Test Manager results in the Run MATLAB Tests build step.

- Archive the metrics results in the derived directory. You must also archive the exported test manager results, as they will allow full navigation of the Metric results when loaded back into MATLAB.

Click Add post-build action and choose Archive the artifacts. Enter path derived/**,matlabTestArtifacts/*.mldatx to archive all files saved to that directory.

Note: To view these results in MATLAB on a machine other than the test machine, do the following:

- Download the archived artifacts (derived directory and test results .mldatx files).

- Extract and copy into a local copy of the same version of the project that was used to run the CI job.

- Open the project in MATLAB and launch the Model Testing Dashboard.

The results generated by the CI will be displayed on the dashboard.

Jenkins® is a registered trademark of LF Charities Inc.

Published 2022